Related courses:

- Emerging Network Technologies - S9 - SDN and network architectures

- Middleware for IoT - S9 - IoT protocols

- Embedded IA for IoT - S9 - AI on Edge

- Service Oriented Architecture - S9 - Cloud service architectures

Cloud & Edge Computing - Semester 9

Academic year: 2024-2025

Instructor: Sami Yangui

Category: Cloud, Virtualization, Edge Computing

PART A - General Presentation

Overview

The Cloud & Edge Computing course covers centralized cloud systems and decentralized edge solutions, preparing students to address modern technological challenges. Taught by Sami Yangui, it explores virtualization technologies, cloud services and edge infrastructures. It focuses on designing, deploying and managing architectures that combine the low latency of edge computing with the scalability of cloud environments.

This training is particularly relevant today, as the rise of IoT, real-time applications and modern networks calls for expertise in these areas.

Learning objectives:

- Understand the fundamentals of virtualization (Type 1 and Type 2 hypervisors, paravirtualization)

- Master containerization with Docker and orchestration with Kubernetes

- Understand cloud service models (IaaS, PaaS, SaaS)

- Deploy and configure OpenStack environments

- Understand the Edge Computing, Fog Computing and MEC (Multi-access Edge Computing) paradigms

- Design integrated cloud-edge architectures

Position in the curriculum

This module builds on previously acquired foundations:

- Network Interconnection (S8): TCP/IP fundamentals, routing, VLAN

- Operating Systems (S5): process management, Unix systems

- Hardware Architecture (S6): physical layer, server hardware

It connects directly to other courses in the semester:

- Emerging Network Technologies (S9): SDN and network virtualization

- Middleware for IoT (S9): IoT communication protocols deployed on cloud/edge

- Service Oriented Architecture (S9): cloud service architectures

PART B - Experience et Contexte

Organisation et ressources

Le module combinait theorie et pratique intensive :

Cours magistraux :

- Introduction au Cloud Computing et ses caracteristiques essentielles

- Technologies de virtualisation (hyperviseurs, conteneurs)

- Modeles de service cloud (IaaS, PaaS, SaaS) et modeles de deploiement

- Architecture OpenStack et ses composants

- Edge Computing, Fog Computing et MEC

- Continuum Cloud-Edge et orchestration

Travaux pratiques :

- TP1 : Configuration de reseaux virtuels avec VirtualBox, routage inter-VM

- TP2 : Creation et gestion de conteneurs Docker, Dockerfiles, volumes

- TP3 : Deploiement d'une infrastructure OpenStack (Nova, Neutron, Glance, Keystone)

- TP4 : Orchestration de conteneurs avec Kubernetes, deploiement de services

Outils utilises :

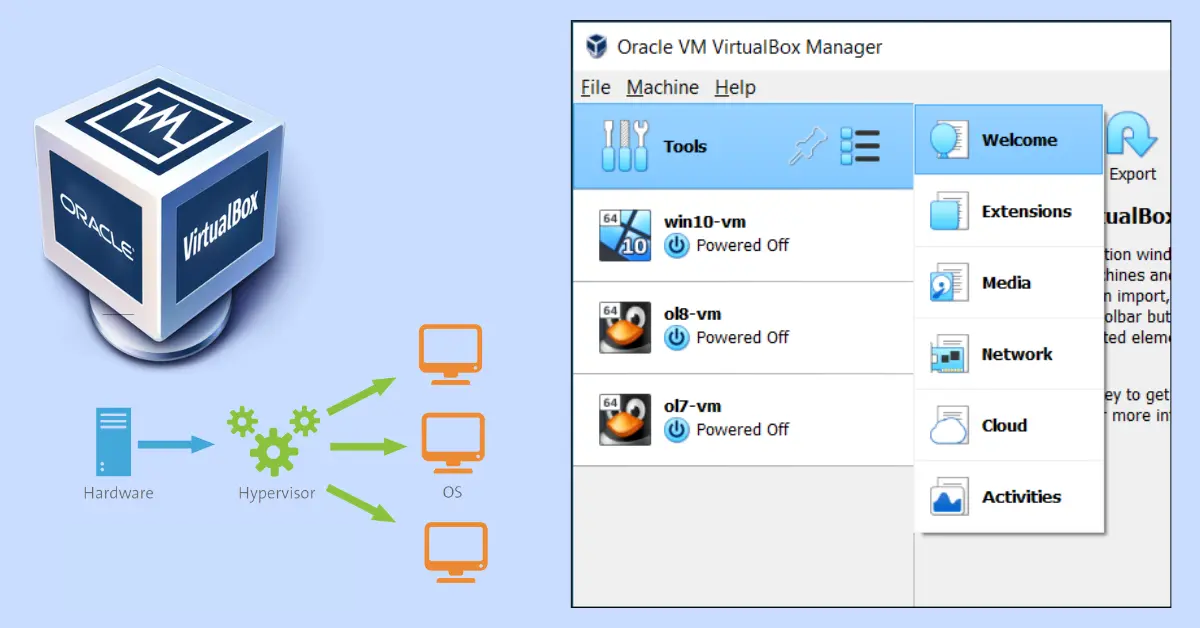

- VirtualBox : virtualisation de type 2 pour les TPs

- Docker : conteneurisation d'applications

- Kubernetes : orchestration de conteneurs

- OpenStack : plateforme cloud open-source (Nova, Neutron, Glance, Keystone, Horizon)

- Linux (Ubuntu) : systeme hote pour les environnements virtualises

Mon role

Dans le cadre de ce cours, j'etais responsable de :

- Apprendre et pratiquer les techniques de virtualisation (VMs et conteneurs)

- Concevoir, deployer et gerer des architectures hybrides combinant cloud et edge computing

- Acquerir des competences avec des outils comme Kubernetes, Docker et VirtualBox

- Rediger un rapport technique complet sur les travaux pratiques realises

Difficultes rencontrees

Configuration reseau VirtualBox :

La mise en place des reseaux virtuels (NAT, bridge, host-only) et la comprehension de leur interaction a necessite du temps et de la methodologie.

Deploiement OpenStack :

OpenStack est une plateforme complexe avec de nombreux composants interdependants. La configuration initiale et la resolution de problemes de connectivite entre services ont ete des defis formateurs.

Kubernetes en temps limite :

La session sur Kubernetes a ete realisee rapidement avec mon binome. Nous avons du executer les commandes sans toujours avoir le temps de comprendre chaque etape en profondeur. Le rapport redige a posteriori nous a permis de mieux assimiler les concepts.

PART C - Aspects Techniques Detailles

1. Fondamentaux de la virtualisation

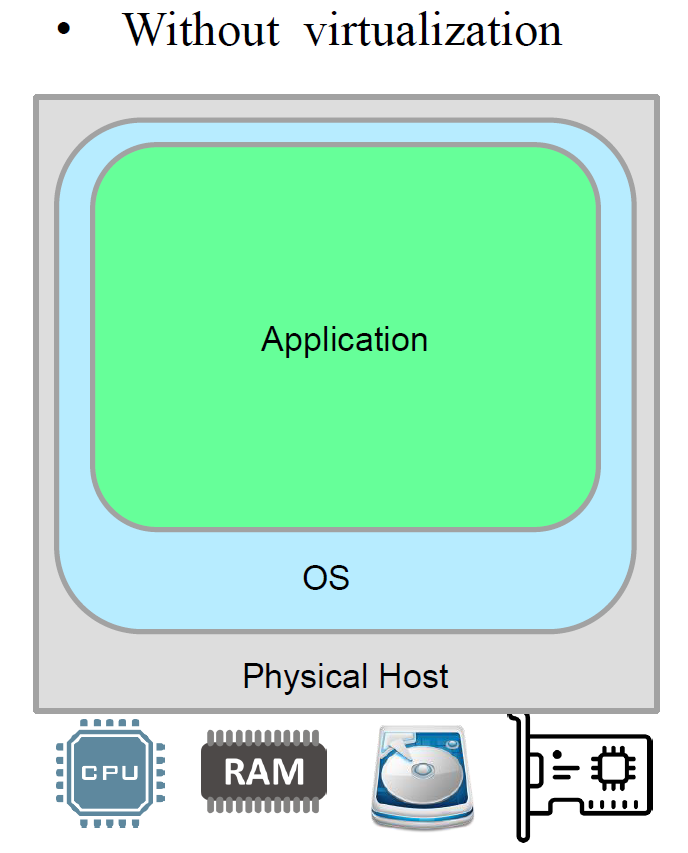

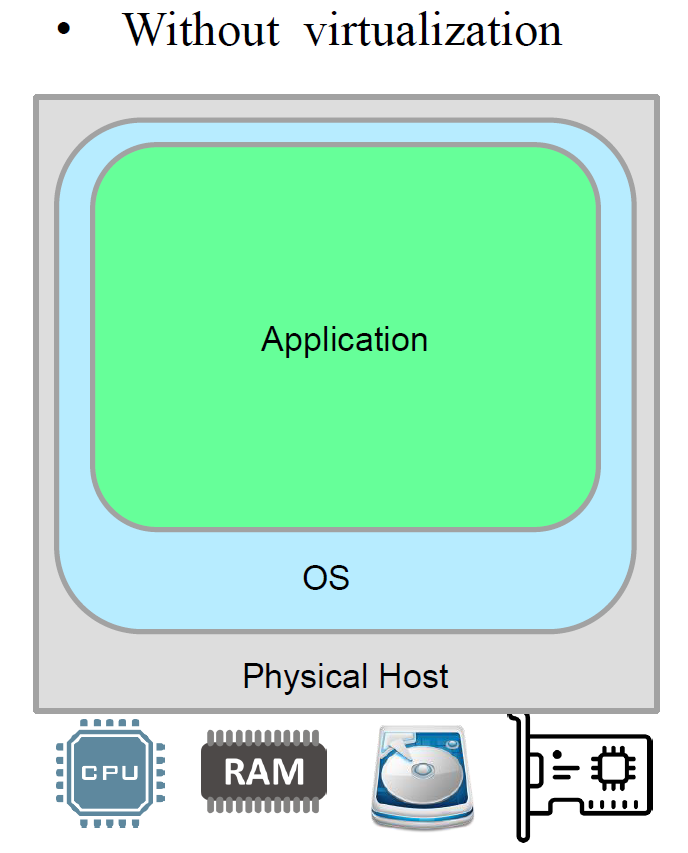

Sans virtualisation :

Dans un environnement sans virtualisation, une seule application s'execute directement sur le systeme d'exploitation hote qui gere le materiel physique. Cette approche presente des limitations en termes d'isolation, de scalabilite et d'utilisation des ressources.

Figure : Architecture sans virtualisation - une seule application sur l'OS hote

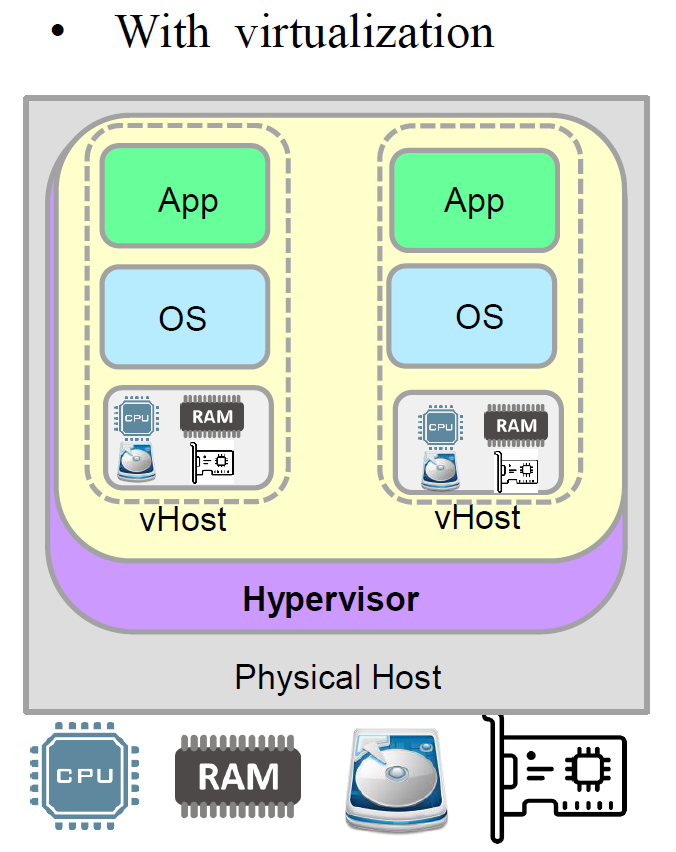

Avec virtualisation :

La virtualisation permet d'executer plusieurs systemes d'exploitation et applications sur un meme materiel physique grace a un hyperviseur. Chaque machine virtuelle (VM) dispose de ses propres ressources virtualisees (CPU, RAM, stockage, reseau).

Figure : Architecture avec virtualisation - plusieurs VMs sur un meme hote physique via un hyperviseur

Avantages de la virtualisation :

- Consolidation de serveurs : reduire le nombre de machines physiques

- Isolation : chaque VM est independante (panne d'une VM ne touche pas les autres)

- Flexibilite : deploiement rapide de nouveaux environnements

- Snapshots et migration : sauvegarde d'etat et migration a chaud

- Optimisation des ressources : meilleure utilisation du materiel
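To make the server consolidation point concrete, here is a minimal sketch that estimates how many physical hosts a set of VMs needs. It uses a first-fit decreasing heuristic, which is my choice for the illustration and not something prescribed by the course; the workload sizes and the 32 GB host capacity are hypothetical, and only RAM is taken into account.

```python
def consolidate(vm_ram_gb, host_ram_gb):
    """Pack VMs onto as few hosts as possible with first-fit decreasing.

    Returns a list of hosts, each host being the list of VM sizes placed on it.
    Only RAM is considered; a real planner would also track CPU, storage and I/O.
    """
    hosts = []
    for vm in sorted(vm_ram_gb, reverse=True):
        if vm > host_ram_gb:
            raise ValueError(f"a {vm} GB VM does not fit on a {host_ram_gb} GB host")
        for host in hosts:
            # Place the VM on the first host that still has room for it.
            if sum(host) + vm <= host_ram_gb:
                host.append(vm)
                break
        else:
            # No existing host can take it: bring a new host into service.
            hosts.append([vm])
    return hosts


# Hypothetical workload: eight lightly loaded servers, given by the RAM each VM needs.
vms = [4, 8, 2, 16, 4, 8, 2, 4]
placement = consolidate(vms, host_ram_gb=32)
print(len(placement), "hosts:", placement)
```

With these assumed figures, eight formerly separate servers fit on two hosts. The heuristic is not guaranteed to be optimal, but it is simple and usually close.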

2. Hyperviseurs Type 1 et Type 2

L'hyperviseur est le composant logiciel qui permet la virtualisation. Il existe deux types principaux :

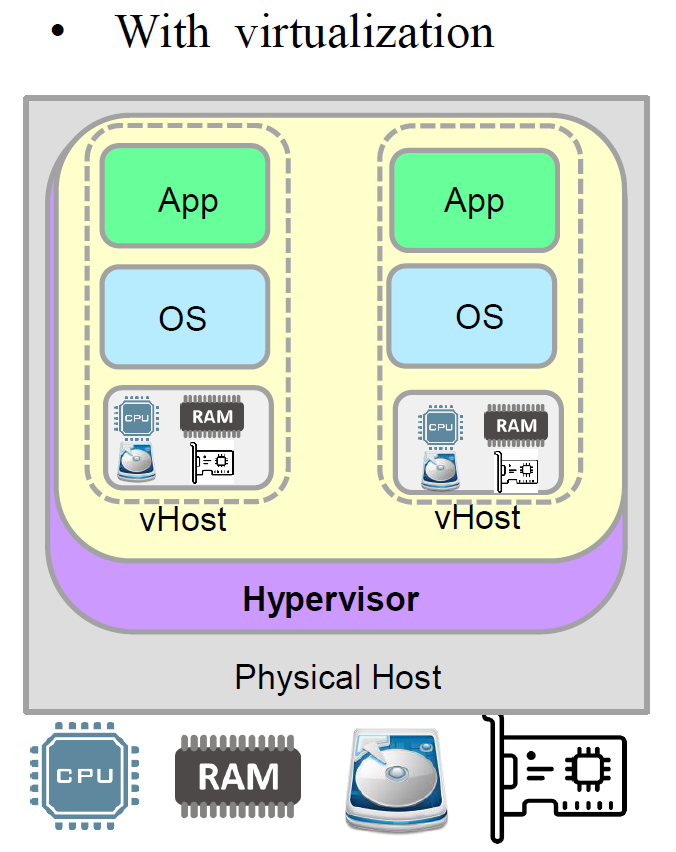

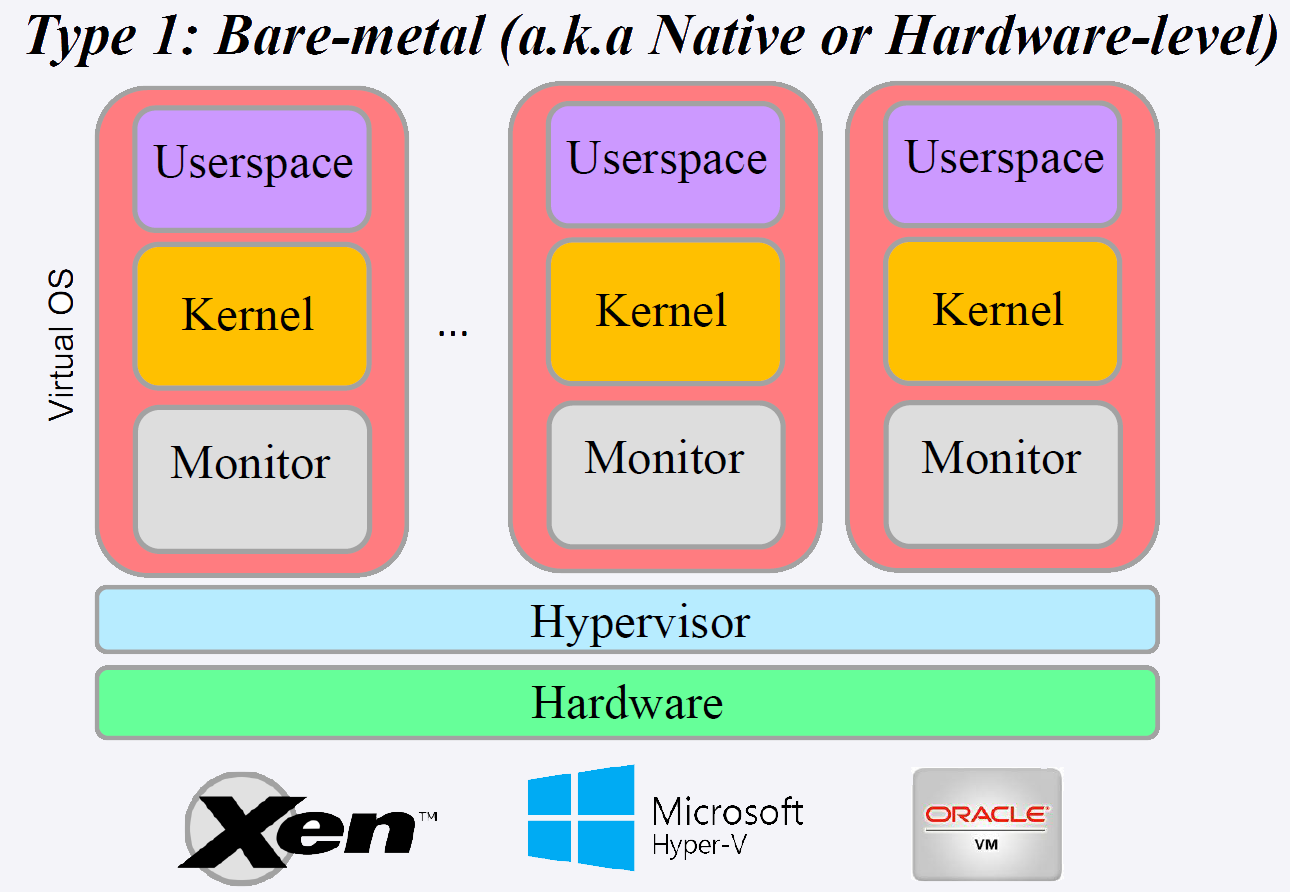

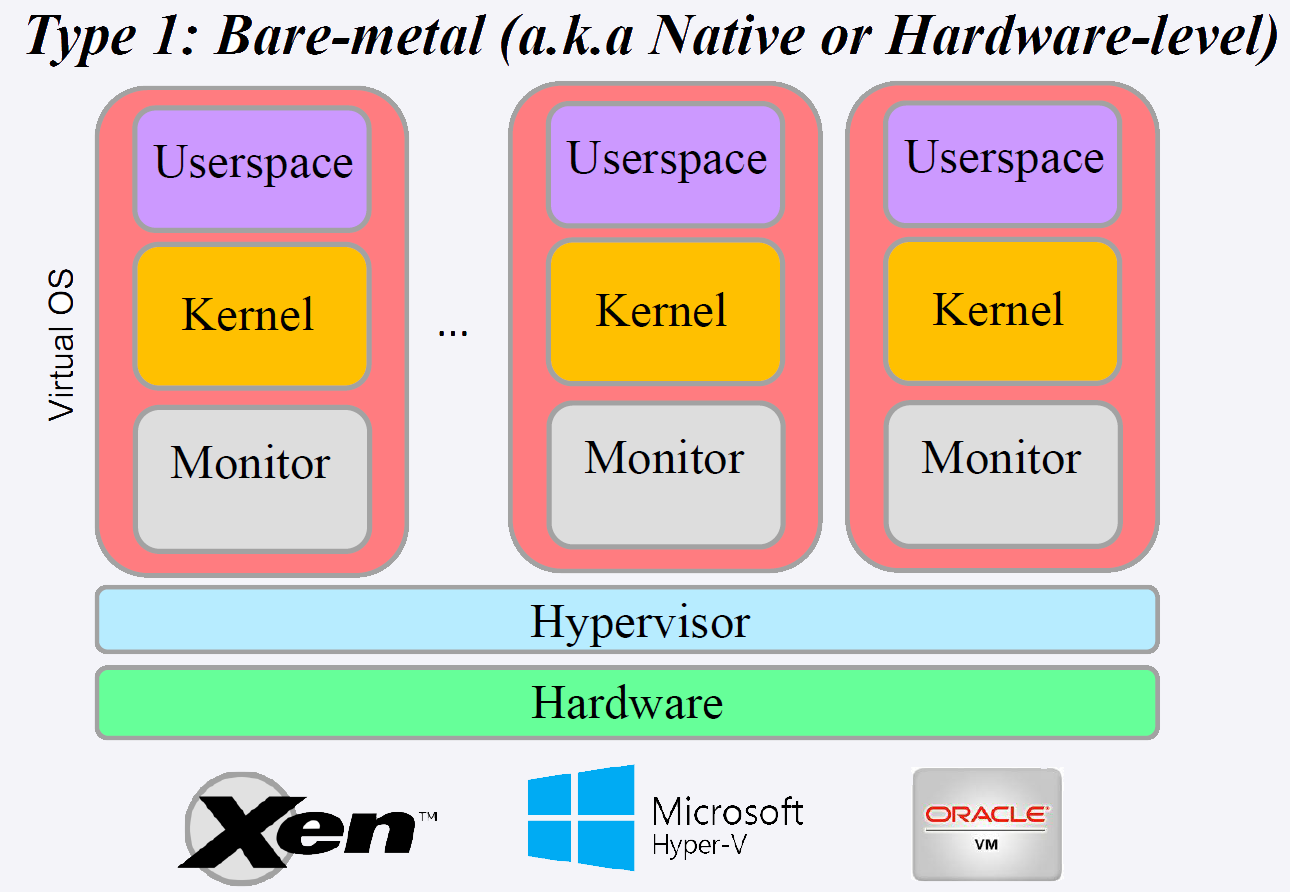

Hyperviseur de Type 1 (Bare Metal) :

L'hyperviseur s'execute directement sur le materiel physique, sans systeme d'exploitation hote intermediaire. Il offre de meilleures performances et une securite accrue car il a un acces direct au materiel.

Exemples : VMware ESXi, Microsoft Hyper-V, Citrix XenServer, KVM

Caracteristiques :

- Performances proches du natif

- Gestion directe des ressources materielles

- Utilise en production dans les datacenters

- Securite renforcee (surface d'attaque reduite)

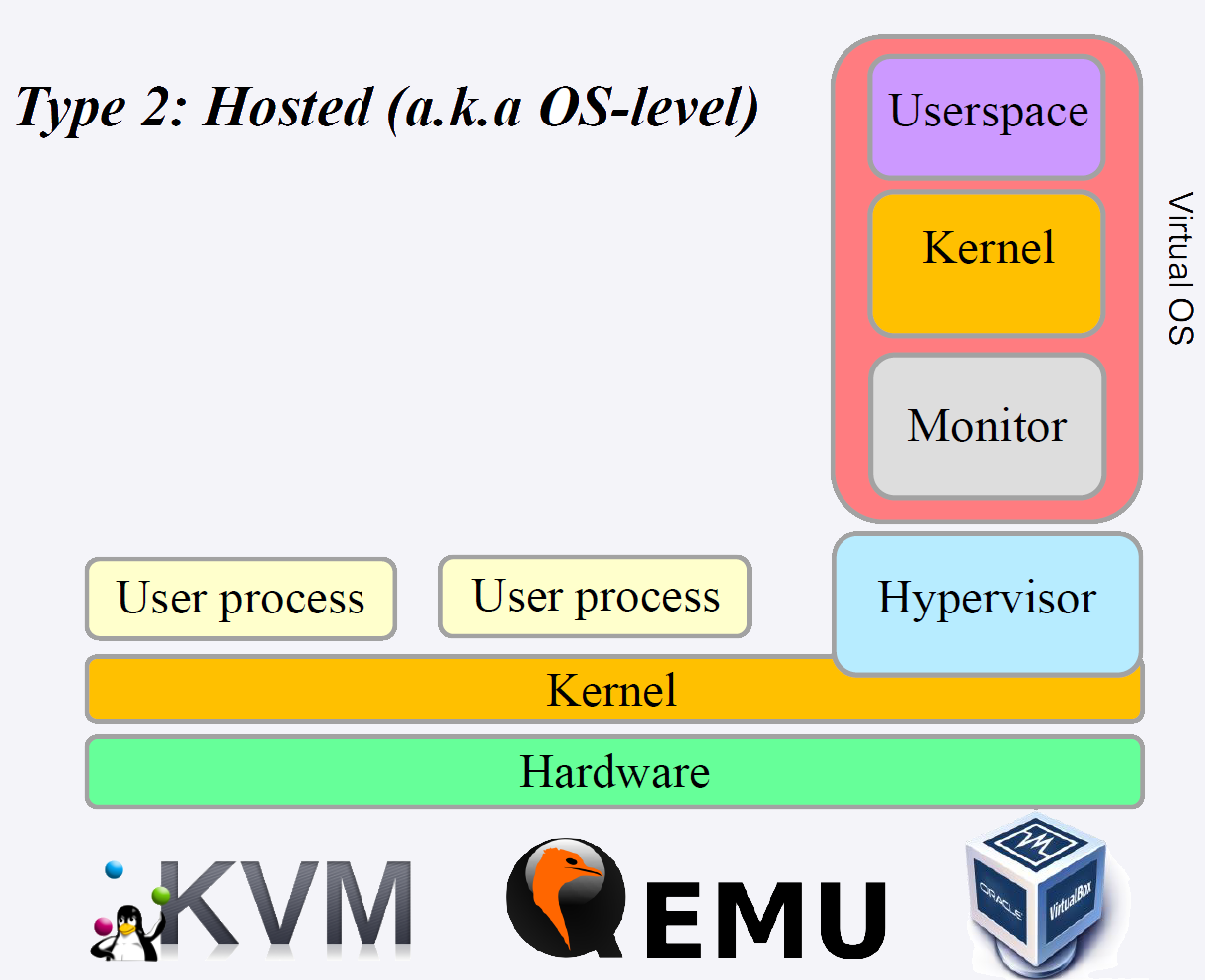

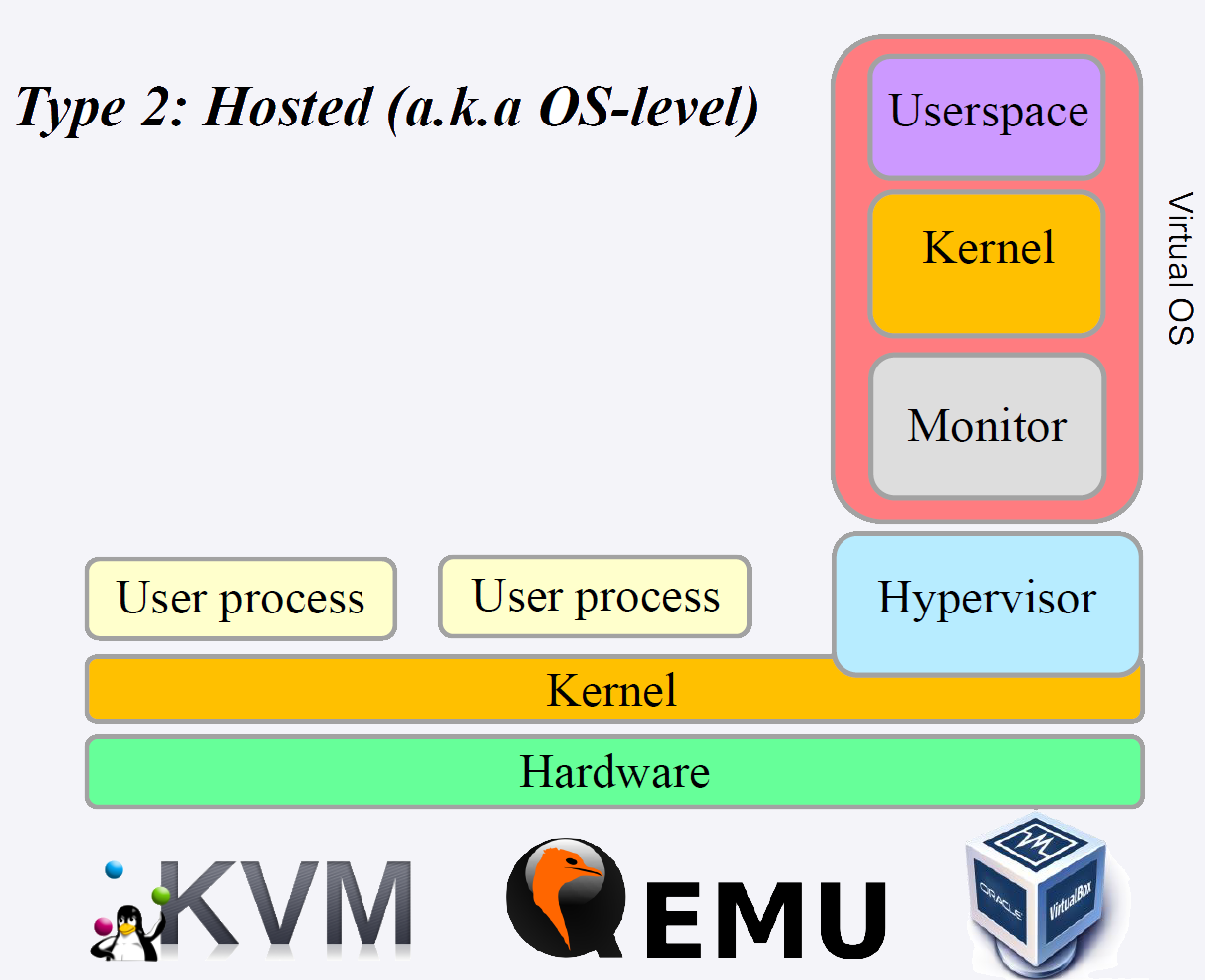

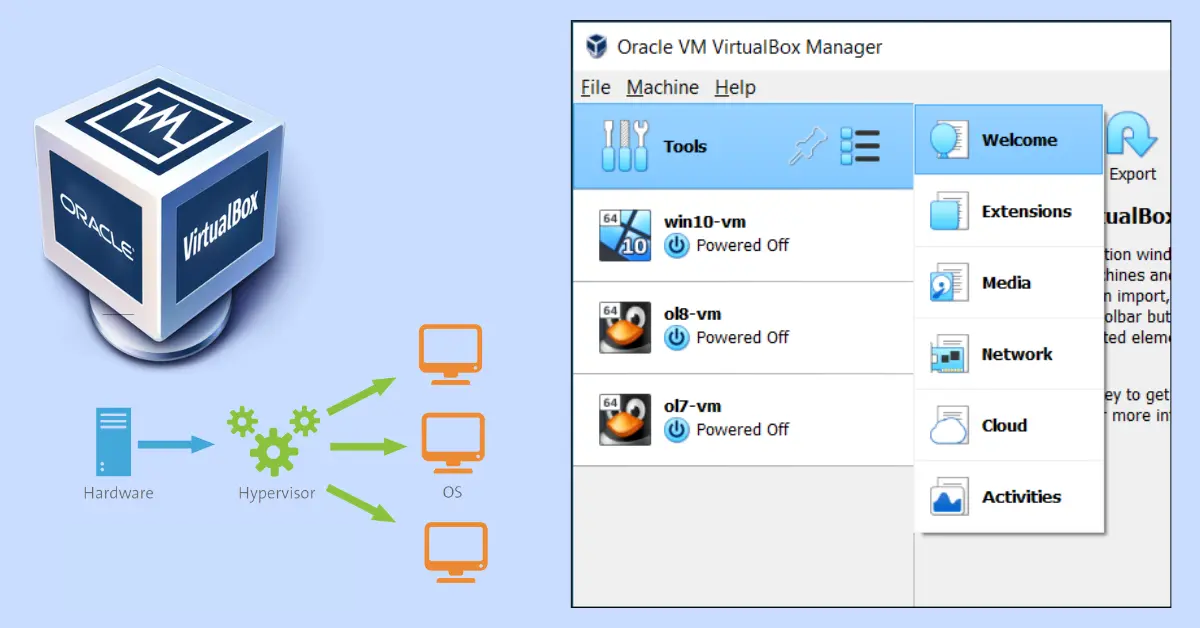

Hyperviseur de Type 2 (Hosted) :

L'hyperviseur s'execute comme une application sur un systeme d'exploitation hote existant. Il est plus simple a installer et a utiliser mais offre des performances legerement inferieures.

Exemples : Oracle VirtualBox, VMware Workstation, Parallels Desktop

Caracteristiques :

- Facile a installer sur un poste de travail

- Ideal pour le developpement et les tests

- Performances reduites (couche OS intermediaire)

- Utilise principalement en environnement desktop

Figure : Hyperviseur Type 1 (Bare Metal)

Figure : Hyperviseur Type 2 (Hosted)

| Critere | Type 1 (Bare Metal) | Type 2 (Hosted) |

|---|---|---|

| Installation | Directement sur le materiel | Sur un OS existant |

| Performance | Elevee | Moderee |

| Usage principal | Datacenters, production | Developpement, tests |

| Securite | Forte (acces direct) | Dependante de l'OS hote |

| Exemples | ESXi, KVM, Hyper-V | VirtualBox, VMware Workstation |

3. Paravirtualization

Paravirtualization is a technique in which the guest operating system is modified to call the hypervisor directly through "hypercalls", instead of relying on the hypervisor to fully emulate the hardware. This improves performance compared with full virtualization, because privileged operations and I/O take an explicit, optimized path rather than being trapped and emulated.

Figure: Paravirtualization solutions - OpenNebula, OpenStack, Proxmox

Advantages of paravirtualization:

- Better performance than full virtualization

- Better handling of I/O (input/output)

- Reduced overhead

Drawbacks:

- Requires modifying the guest operating system

- Compatibility limited to modified operating systems

4. Containers vs Virtual Machines

Containers are a major evolution compared with traditional virtual machines. Unlike VMs, which virtualize the complete hardware, containers share the kernel of the host operating system and embed only the libraries and dependencies the application needs.

Figure: Container ecosystem - Container Linux, Solaris, Docker

| Criterion | Virtual Machine | Container |
|---|---|---|
| Isolation | Complete (separate OS) | Process level |
| Size | GB (full OS) | MB (libraries only) |
| Start-up | Minutes | Seconds |
| Performance | Overhead (hypervisor) | Near native |
| Portability | Limited | Excellent |
| Density | ~10-20 VMs per server | ~100+ containers per server |
| Security | Strong (hardware-level isolation) | Moderate (shared kernel) |

Use cases for VMs:

- Strong isolation required (multi-tenancy, security)

- Different operating systems on the same host

- Legacy applications

Use cases for containers:

- Microservices

- CI/CD (continuous integration and deployment)

- Cloud-native applications

- Reproducible development environments

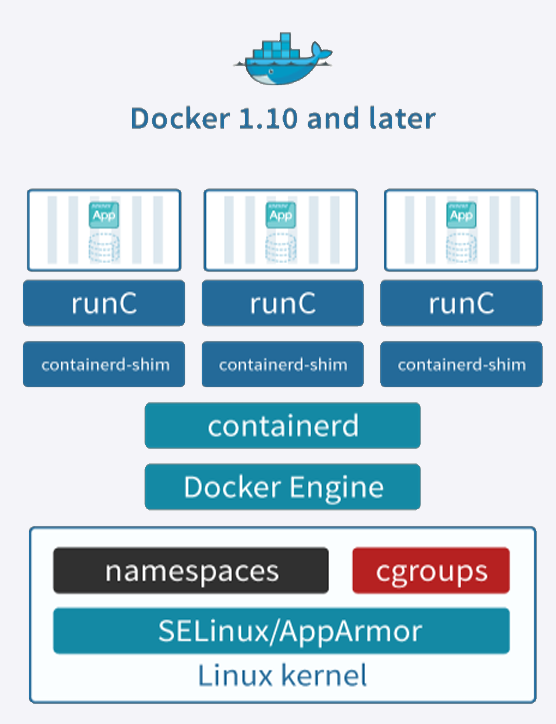

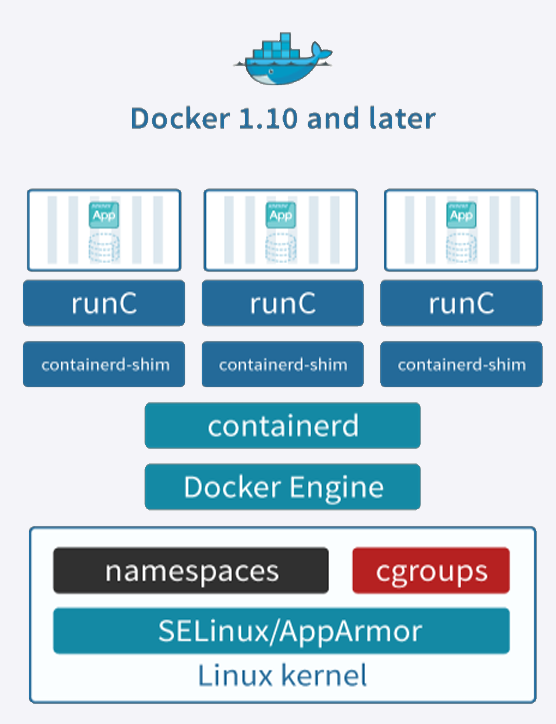

5. Docker and containerization

Docker is the most popular containerization platform. It makes it possible to build, deploy and manage containers efficiently. During the lab sessions, I learned to use Docker to run applications in isolated environments.

Figure: Docker logo

Docker architecture:

- Docker Engine: the daemon that manages containers

- Docker Image: read-only template containing the application and its dependencies

- Docker Container: running instance of an image

- Dockerfile: build file describing how to construct an image

- Docker Hub: public registry of Docker images

- Docker Compose: tool for defining multi-container applications

Main commands used in the labs:

    # Pull an image from Docker Hub
    docker pull ubuntu:20.04

    # Start a container in interactive mode
    docker run -it --name mon_conteneur ubuntu:20.04 /bin/bash

    # List running containers
    docker ps

    # List all containers (running and stopped)
    docker ps -a

    # Build an image from the Dockerfile in the current directory
    docker build -t mon_image .

    # Publish host port 8080 to container port 80 and run in the background
    docker run -d -p 8080:80 --name serveur_web nginx

    # Mount a host directory as a volume for persistence
    docker run -v /host/data:/container/data mon_image

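Example Dockerfile:

    # Base image
    FROM ubuntu:20.04
    # Install Python 3 and pip (the Ubuntu package is python3-pip, not pip)
    RUN apt-get update && apt-get install -y python3 python3-pip
    # Copy the application into the image and run it from /app
    COPY app.py /app/
    WORKDIR /app
    # Document the port the application listens on
    EXPOSE 5000
    CMD ["python3", "app.py"]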

Advantages of Docker:

- Portability ("Build once, run anywhere")

- Reproducible environments

- Application isolation

- Fast start-up (seconds)

- Rich ecosystem (Docker Hub, Docker Compose)

6. Cloud service models: IaaS, PaaS, SaaS

Cloud Computing offers several levels of abstraction for its services, represented by three main models:

Figure: Cloud service models - IaaS, SaaS, PaaS and their levels of abstraction

IaaS (Infrastructure as a Service):

Provides virtualized infrastructure resources (servers, storage, network). The user manages the OS, the middleware and the applications.

- Examples: AWS EC2, Google Compute Engine, Azure Virtual Machines, OpenStack

- User responsibility: OS, runtime, applications, data

- Provider responsibility: hardware, virtualization, network

- Flexibility: maximal (full control over the virtual infrastructure)

PaaS (Platform as a Service):

Provides a development and deployment platform. The user focuses on application code without managing the underlying infrastructure.

- Examples: Google App Engine, Heroku, Azure App Service, Cloud Foundry

- User responsibility: applications and data

- Provider responsibility: OS, runtime, middleware, infrastructure

- Flexibility: moderate (platform constraints)

SaaS (Software as a Service):

Software applications accessed through the browser and fully managed by the provider.

- Examples: Google Workspace, Microsoft 365, Salesforce, Dropbox

- User responsibility: data and settings

- Provider responsibility: everything else

- Flexibility: limited (configuration only)

| Aspect | IaaS | PaaS | SaaS |
|---|---|---|---|
| Control | Full | Partial | Minimal |
| Complexity | High | Medium | Low |
| Scalability | Manual/semi-automatic | Automatic | Automatic |
| Cost | Pay-per-use | Pay-per-use | Subscription |
| Target audience | System administrators | Developers | End users |
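The responsibility split described above can be written down as a small lookup structure. This is only a sketch: the layer names are a simplification I introduce for the example (middleware is folded into "runtime"), not an official taxonomy.

```python
# Layers of the stack, from the physical infrastructure up to the user's data.
LAYERS = ["hardware", "virtualization", "network", "os", "runtime", "applications", "data"]

# Layers managed by the user in each model, following the split described above.
# Everything not listed is managed by the provider.
USER_MANAGED = {
    "IaaS": {"os", "runtime", "applications", "data"},
    "PaaS": {"applications", "data"},
    "SaaS": {"data"},
}


def managed_by(model: str, layer: str) -> str:
    """Return "user" or "provider" for a given service model and layer."""
    if layer not in LAYERS:
        raise ValueError(f"unknown layer: {layer}")
    return "user" if layer in USER_MANAGED[model] else "provider"


for model in USER_MANAGED:
    print(model, {layer: managed_by(model, layer) for layer in LAYERS})
```

Reading the printed rows from IaaS to SaaS shows the boundary between user and provider moving up the stack, which is what "level of abstraction" means here.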

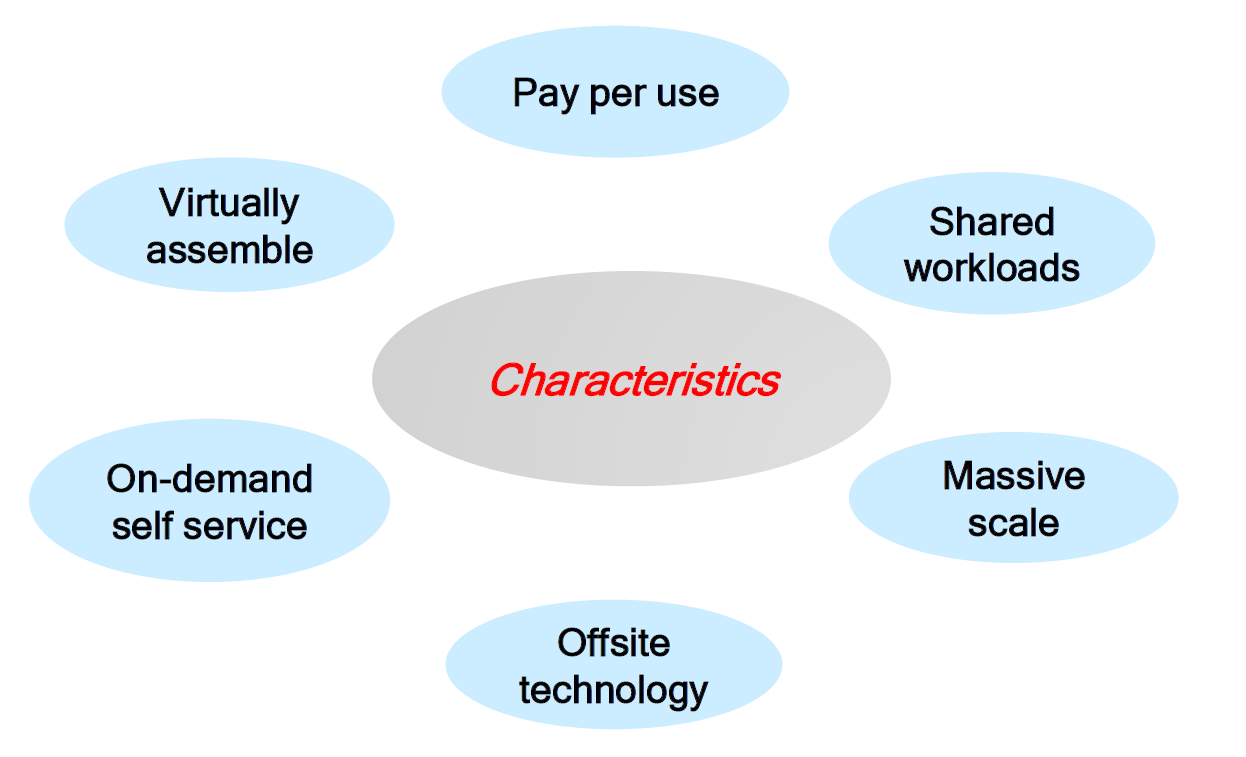

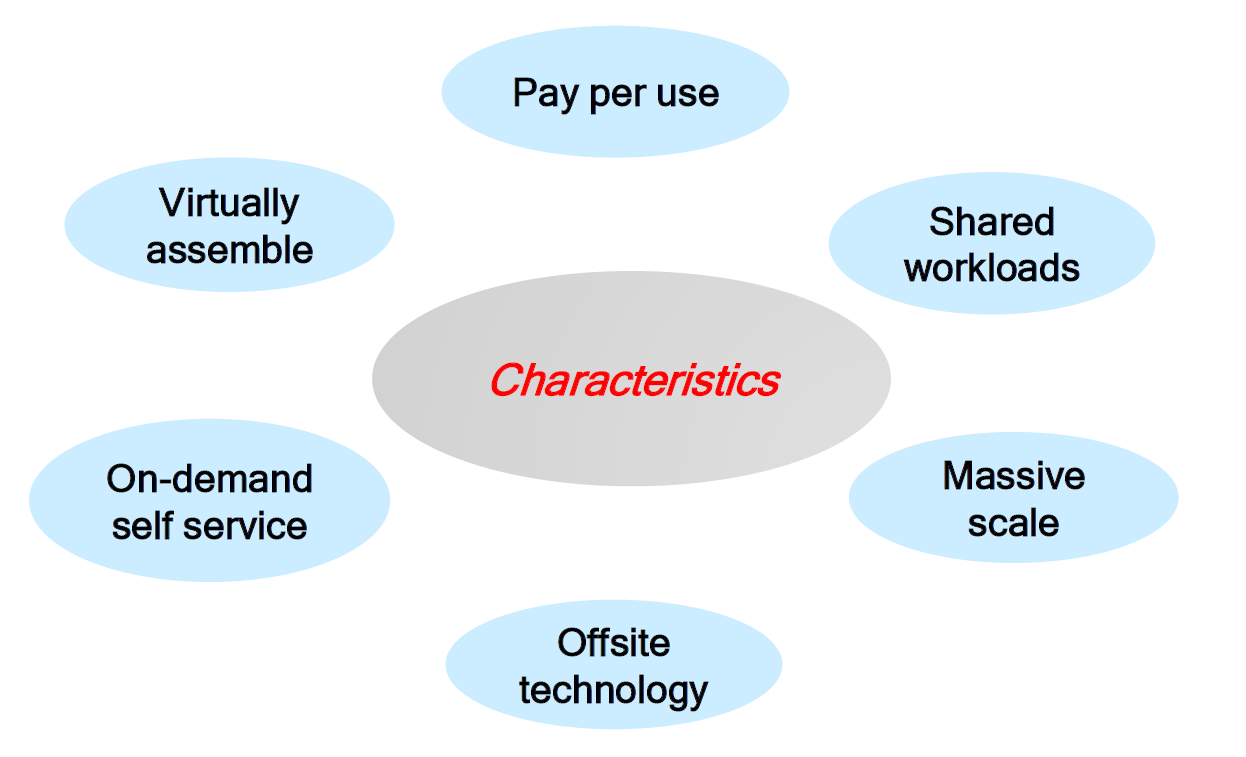

Essential characteristics of the Cloud (NIST):

Figure: The essential characteristics of Cloud Computing according to NIST

The five essential characteristics defined by NIST:

- On-demand self-service: resources are provisioned by the user without human interaction with the provider

- Broad network access: access through standard mechanisms (HTTP, APIs)

- Resource pooling: resources are shared among several customers (multi-tenancy)

- Rapid elasticity: resources can be provisioned and released quickly, in some cases automatically, to follow demand

- Measured service: resource usage is metered, which enables pay-per-use billing

Cloud deployment models:

- Public cloud: shared infrastructure, open to everyone (AWS, Azure, GCP)

- Private cloud: infrastructure dedicated to a single organization (on-premise OpenStack)

- Hybrid cloud: combination of public and private cloud

- Community cloud: shared among organizations with similar needs

7. OpenStack - Open-Source Cloud Platform

OpenStack is an open-source cloud computing platform, mostly deployed as IaaS. It manages large pools of compute, storage and network resources, all of which can be administered through a dashboard (Horizon) or through the OpenStack API.

Figure: OpenStack architecture and its main components

Main OpenStack components:

| Component | Project name | Function |
|---|---|---|
| Compute | Nova | Management of virtual machine instances |
| Networking | Neutron | Virtual networks, subnets, routers, firewalls |
| Image | Glance | Storage and management of VM images |
| Identity | Keystone | Authentication, authorization, service catalog |
| Dashboard | Horizon | Web administration interface |
| Block Storage | Cinder | Persistent storage volumes |
| Object Storage | Swift | Distributed object storage |
| Orchestration | Heat | Infrastructure templates (Infrastructure as Code) |

OpenStack architecture:

OpenStack follows a distributed-services model in which the services communicate through REST APIs. Keystone provides centralized authentication. Nova manages the VM life cycle, relying on Glance for images, Neutron for networking and Cinder for storage.
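As an illustration of the centralized authentication step, the sketch below builds the JSON body of a Keystone v3 password authentication request. It does not contact any server. The credentials and project name are hypothetical, and the "Default" domain is an assumption that matches common lab installations.

```python
import json


def build_password_auth(username, password, project, domain="Default"):
    """Build the JSON body for a Keystone v3 password authentication request.

    The body is sent with POST to <keystone-endpoint>/v3/auth/tokens. On success
    the token comes back in the X-Subject-Token response header and is then
    passed to the other services in the X-Auth-Token request header.
    """
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        "domain": {"name": domain},
                        "password": password,
                    }
                },
            },
            # Scope the token to one project so the other services accept it.
            "scope": {"project": {"name": project, "domain": {"name": domain}}},
        }
    }


# Hypothetical credentials, for illustration only.
body = build_password_auth("demo", "secret", "demo-project")
print(json.dumps(body, indent=2))
```

Every later call to Nova, Neutron or Glance carries the token obtained this way, which is why a Keystone misconfiguration tends to show up as failures in all the other services.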

Manipulations realisees en TP :

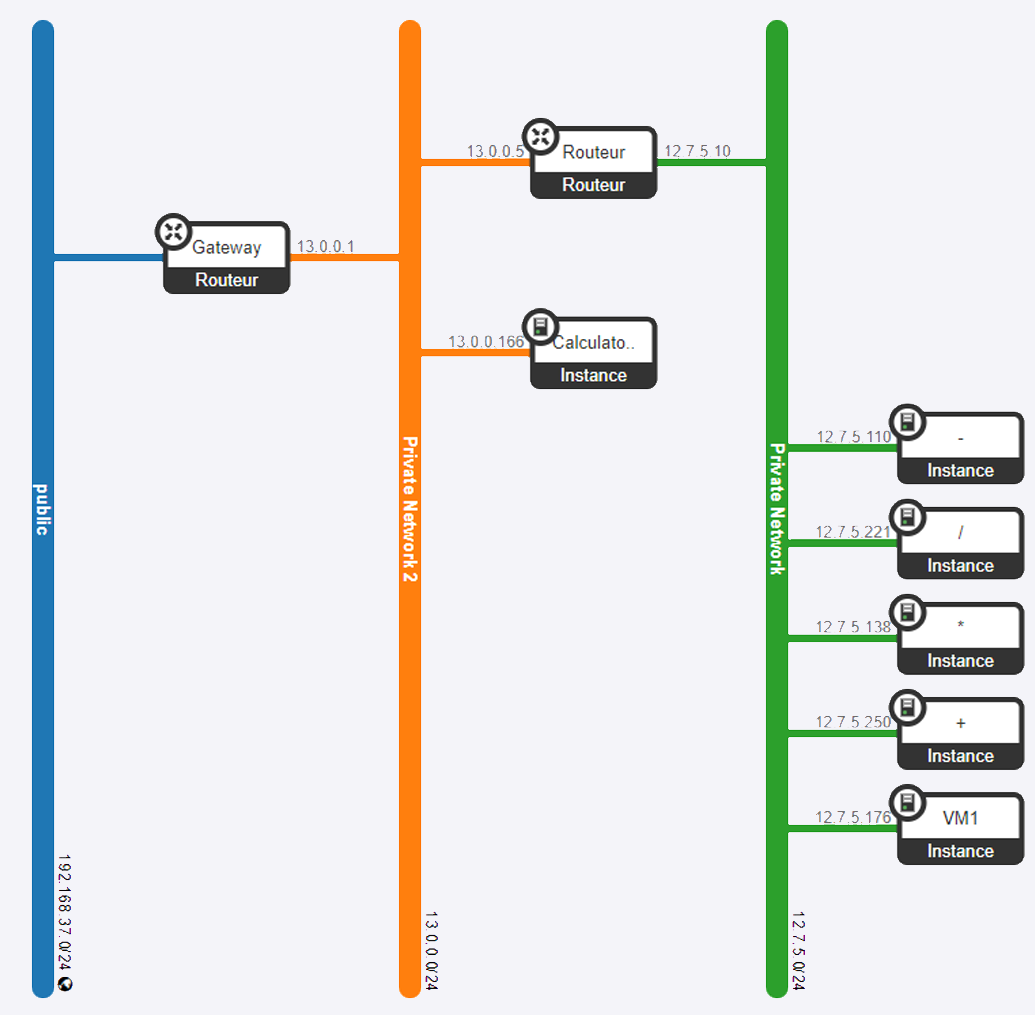

- Creation de reseaux virtuels et sous-reseaux avec Neutron

- Deploiement d'instances VM avec Nova

- Configuration de regles de securite (security groups)

- Utilisation du dashboard Horizon pour l'administration visuelle

- Gestion des images avec Glance

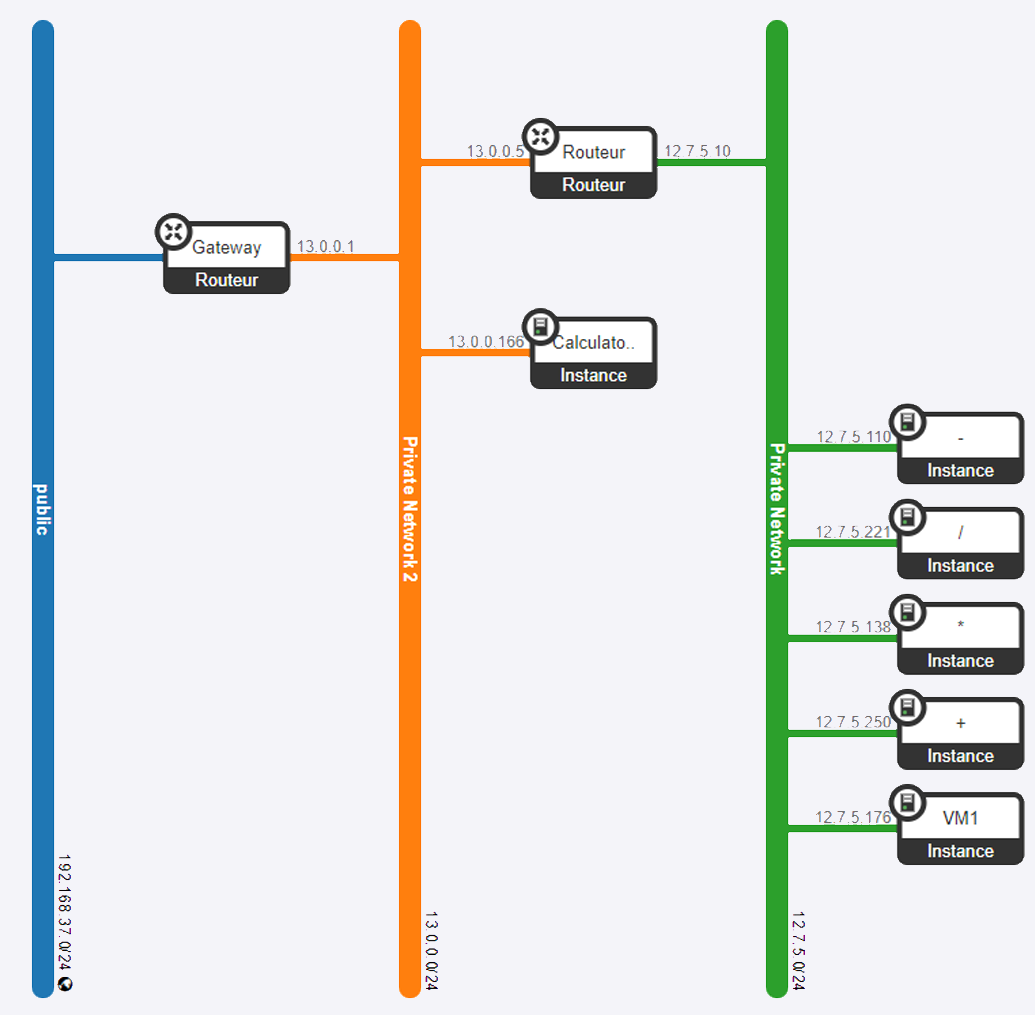

8. Network configuration with VirtualBox

The lab sessions gave me the opportunity to configure virtual networks with VirtualBox. I learned to create and configure virtual machines, test their connectivity, and set up port forwarding rules so that the VMs and the host can communicate.

Figure: Network configuration with VirtualBox

VirtualBox network modes configured:

| Network mode | Internet access | Inter-VM communication | Access from the host |
|---|---|---|---|
| NAT | Yes | No | Via port forwarding |
| Bridged | Yes | Yes | Yes |
| Host-Only | No | Yes | Yes |
| Internal | No | Yes (same network) | No |
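The table above can be turned into a small lookup that answers "which mode fits these requirements?". This is a sketch that only transcribes the table; the function name and the encoding of host access are my own choices.

```python
# Capabilities of each VirtualBox network mode, transcribed from the table above.
# "host" is "port-forwarding" when the host can only reach the VM through a forwarded port.
MODES = {
    "NAT":       {"internet": True,  "inter_vm": False, "host": "port-forwarding"},
    "Bridged":   {"internet": True,  "inter_vm": True,  "host": "direct"},
    "Host-Only": {"internet": False, "inter_vm": True,  "host": "direct"},
    "Internal":  {"internet": False, "inter_vm": True,  "host": "none"},
}


def suitable_modes(internet=None, inter_vm=None, host_access=None):
    """Return the modes that satisfy every requirement that is not None."""
    result = []
    for name, caps in MODES.items():
        if internet is not None and caps["internet"] != internet:
            continue
        if inter_vm is not None and caps["inter_vm"] != inter_vm:
            continue
        if host_access is not None and (caps["host"] != "none") != host_access:
            continue
        result.append(name)
    return result


# An isolated lab network: VMs talk to each other, nothing reaches the Internet or the host.
print(suitable_modes(internet=False, inter_vm=True, host_access=False))
# VMs that need Internet access and must also reach each other.
print(suitable_modes(internet=True, inter_vm=True))
```

With the table as given, the first query leaves only Internal and the second only Bridged.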

Tasks carried out:

- Configuring private networks and routers so that different VMs can communicate

- Setting up port forwarding rules for SSH access

- Connectivity tests (ping, traceroute) between VMs

- Configuring isolated subnets

9. Edge Computing paradigm

Edge Computing is a paradigm in which data is processed as close as possible to where it is generated, rather than being sent systematically to a centralized cloud datacenter. It is fundamental for applications that need low latency, reduced bandwidth usage or data sovereignty.

Motivations for Edge Computing:

- Latency: real-time applications (autonomous vehicles, augmented reality) cannot tolerate round-trip delays to the cloud

- Bandwidth: the data volumes generated by IoT devices saturate network links

- Data sovereignty: some regulations require data to be processed locally

- Reliability: operation must continue even when the connection to the cloud is lost

Key characteristics:

- Local data processing, as close as possible to users and sensors

- Lower latency (on the order of ~100 ms for a cloud round trip down to <10 ms)

- Filtering and aggregation of data before it is sent to the cloud

- Disconnected operation is possible
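Filtering and aggregation before sending to the cloud can be sketched in a few lines. The example assumes an edge node that collects a window of sensor samples and forwards a single summary message; the temperature values, the threshold and the message fields are all hypothetical.

```python
from statistics import mean


def summarize_window(readings, alert_threshold):
    """Reduce a window of raw sensor readings to one summary message.

    Only the summary and the readings above the threshold are forwarded to the
    cloud; the remaining raw samples stay on the edge node.
    """
    if not readings:
        raise ValueError("empty window")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        "alerts": [r for r in readings if r > alert_threshold],
    }


# Hypothetical temperature samples (degrees Celsius) collected over one window.
window = [21.0, 21.5, 22.0, 35.5, 21.5, 22.0]
message = summarize_window(window, alert_threshold=30.0)
print(message)
```

Six samples become one message, and the only raw value that leaves the edge is the one that crossed the threshold. The same idea scales to thousands of samples per window, which is where the bandwidth saving becomes significant.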

10. Fog Computing

Fog Computing, introduced by Cisco, is an extension of cloud computing that brings compute, storage and network services closer to edge devices. It acts as an intermediate layer between IoT devices (edge) and the centralized cloud.

Fog vs Edge differences:

| Aspect | Edge Computing | Fog Computing |
|---|---|---|
| Location | On the device or very close to it | Between edge and cloud |
| Compute capacity | Limited | Moderate |
| Latency | Very low | Low |
| Examples | Sensors, IoT gateways | Local servers, smart routers |
| Scope | Immediate processing | Aggregation, pre-processing |

Fog Computing architecture:

- Edge layer: sensors and actuators (raw data collection)

- Fog layer: gateways, local servers (pre-processing, filtering, fast decisions)

- Cloud layer: datacenters (in-depth analysis, long-term storage, machine learning)

11. MEC - Multi-access Edge Computing

MEC (Multi-access Edge Computing), standardized by ETSI, places compute capacity inside the infrastructure of telecommunications operators, typically at base stations or network access points.

Characteristics of MEC:

- Integration with the operators' network infrastructure (4G/5G)

- Standardized APIs for access to network information (location, QoS)

- Hosting of third-party applications as close as possible to the access network

- Low latency made possible by proximity to the antennas

MEC use cases:

- Connected vehicles (V2X): ultra-reliable, low-latency communication

- Augmented/virtual reality: real-time rendering

- Cloud gaming: game streaming with minimal latency

- Video analytics: real-time analysis of video streams

- Industrial IoT: control of industrial processes

12. Cloud-Edge Continuum

The cloud-edge continuum is a unified vision in which compute resources are distributed continuously from edge devices up to the centralized cloud. The goal is transparent orchestration that automatically places each workload where it is most appropriate.

Principles of the continuum:

- Dynamic placement: applications migrate between edge, fog and cloud according to needs (latency, load, cost)

- Unified orchestration: a single control plane manages all the resources

- Heterogeneity: integration of diverse resources (x86, ARM, GPU, FPGA)

- Elasticity: horizontal and vertical scalability at every level
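Dynamic placement can be pictured as a small decision function. The sketch below is one possible policy among many, not the course's algorithm: it keeps scarce edge resources free by choosing the farthest tier that still meets the latency bound. The tier latencies and capacities are illustrative assumptions, not measurements.

```python
# Hypothetical tiers, ordered from the closest to the farthest from the data source.
# Latency and capacity figures are illustrative assumptions, not measurements.
TIERS = [
    {"name": "edge",  "latency_ms": 5,   "free_cpu": 2},
    {"name": "fog",   "latency_ms": 20,  "free_cpu": 16},
    {"name": "cloud", "latency_ms": 100, "free_cpu": 1000},
]


def place(max_latency_ms, cpu_needed, tiers=TIERS):
    """Pick the farthest tier that still meets the latency bound and has capacity.

    Preferring the farthest acceptable tier keeps the scarce edge resources
    free for the workloads that really need them.
    """
    candidates = [
        t for t in tiers
        if t["latency_ms"] <= max_latency_ms and t["free_cpu"] >= cpu_needed
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda t: t["latency_ms"])["name"]


print(place(max_latency_ms=10, cpu_needed=1))    # tight latency bound
print(place(max_latency_ms=50, cpu_needed=8))    # moderate bound, more CPU
print(place(max_latency_ms=500, cpu_needed=64))  # batch analytics
print(place(max_latency_ms=10, cpu_needed=8))    # no tier qualifies
```

A `None` result is the interesting case in practice: it means the requirements cannot be met with the current resources, so the orchestrator has to scale a tier or relax a constraint.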

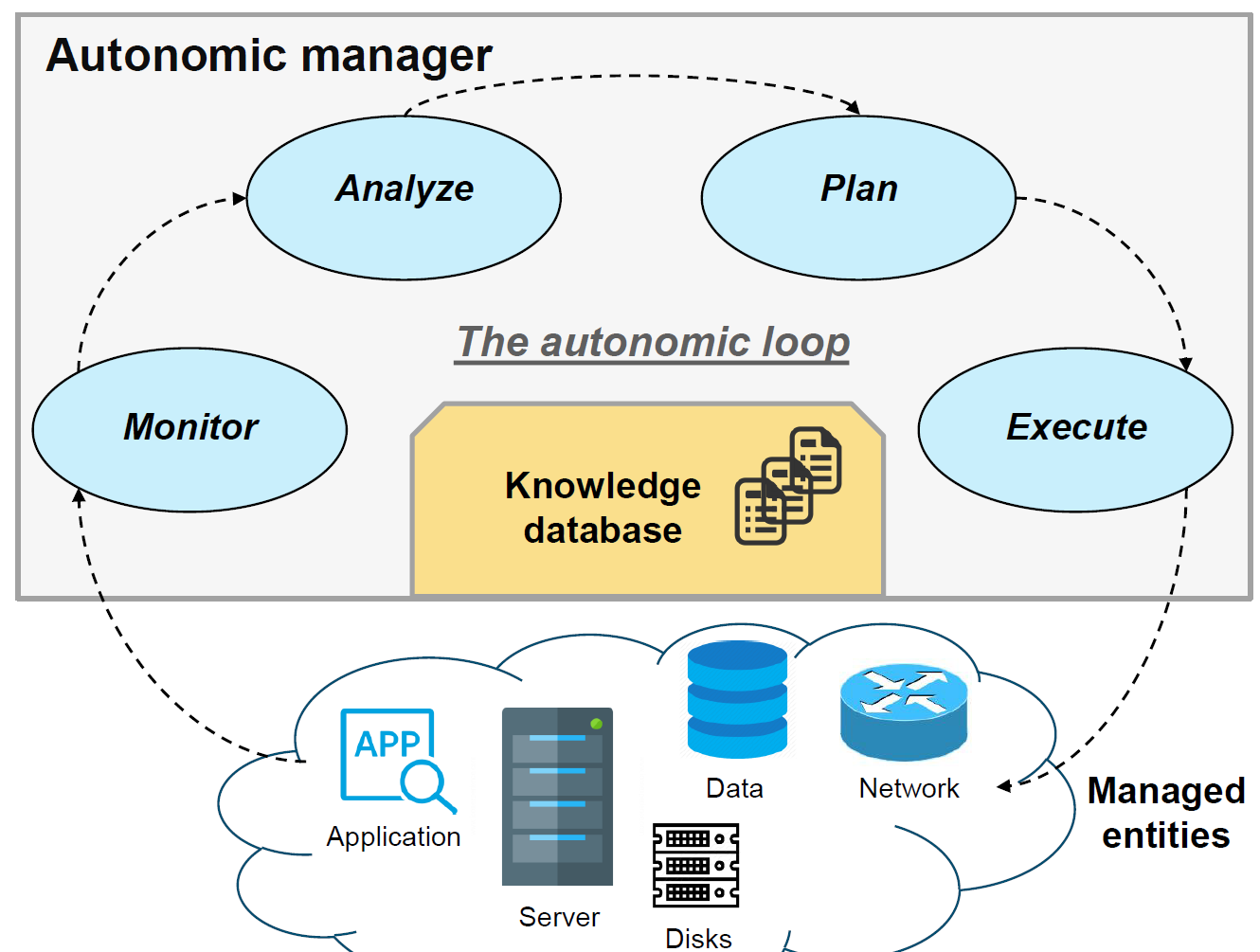

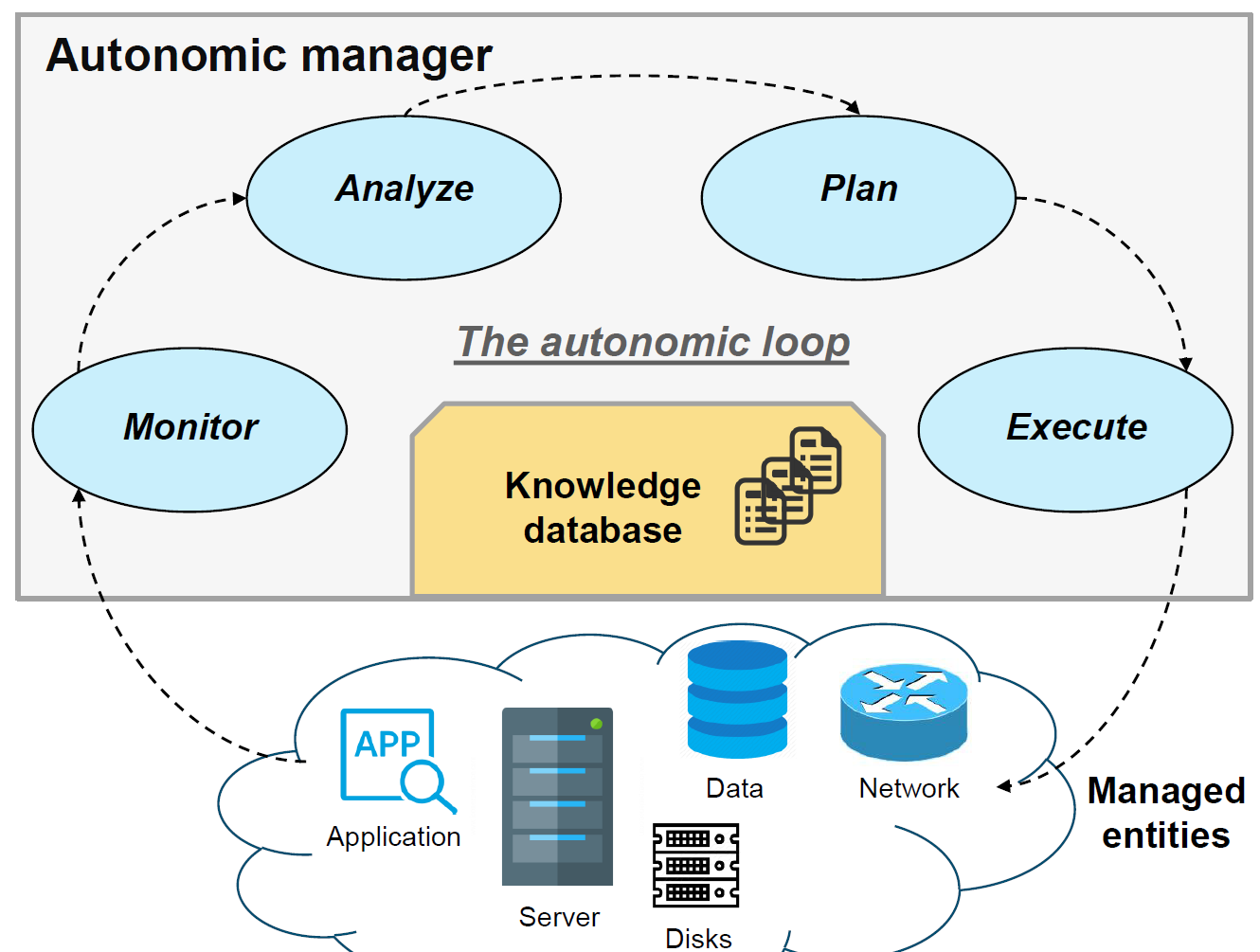

Autonomic management:

Figure: MAPE-K autonomic loop (Monitor, Analyze, Plan, Execute) for managing the cloud-edge continuum

The MAPE-K model (Monitor, Analyze, Plan, Execute - Knowledge) enables autonomic management of the resources of the continuum:

- Monitor: collection of metrics (latency, CPU load, bandwidth)

- Analyze: detection of anomalies and trends

- Plan: placement, migration and scaling decisions

- Execute: application of the decisions (deployment, container migration)

- Knowledge: shared knowledge base that feeds the loop
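One iteration of the MAPE-K loop can be sketched with one function per phase. Everything here is a toy: the node is a plain dictionary, the only metric is CPU load, and the thresholds stored in the knowledge base are assumed values.

```python
def monitor(node):
    # Monitor: read the current metrics (here, a value stored on the node).
    return {"cpu": node["cpu"]}


def analyze(metrics, knowledge):
    # Analyze: compare the metrics with the thresholds kept in the knowledge base.
    if metrics["cpu"] > knowledge["cpu_high"]:
        return "overloaded"
    if metrics["cpu"] < knowledge["cpu_low"]:
        return "underused"
    return "ok"


def plan(symptom):
    # Plan: map each symptom to a replica-count change.
    return {"overloaded": +1, "underused": -1, "ok": 0}[symptom]


def execute(node, delta):
    # Execute: apply the change, never going below one replica.
    node["replicas"] = max(1, node["replicas"] + delta)


def mape_k_step(node, knowledge):
    metrics = monitor(node)
    symptom = analyze(metrics, knowledge)
    delta = plan(symptom)
    execute(node, delta)
    # Knowledge: record what was observed and decided for later iterations.
    knowledge["history"].append((metrics["cpu"], symptom, delta))
    return symptom


knowledge = {"cpu_high": 0.8, "cpu_low": 0.2, "history": []}
node = {"cpu": 0.93, "replicas": 2}
print(mape_k_step(node, knowledge), node["replicas"])
```

The knowledge base is both read (thresholds) and written (history) in each pass, which is what distinguishes MAPE-K from a plain feedback loop.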

13. Container orchestration with Kubernetes

Kubernetes (K8s) is the de facto standard platform for orchestrating containers at scale. It automates the deployment, scaling and management of containerized applications.

Kubernetes architecture:

Control Plane:

- kube-apiserver: entry point for all operations (REST API)

- etcd: distributed key-value store (cluster state)

- kube-scheduler: assigns pods to nodes

- kube-controller-manager: controllers that maintain the desired state

Worker nodes:

- kubelet: agent on each node that manages the pods

- kube-proxy: network rules and load balancing for Services

- Container runtime: containerd or CRI-O; Docker Engine requires the cri-dockerd adapter since built-in Docker support (dockershim) was removed in Kubernetes 1.24

Core concepts:

| Concept | Description |
|---|---|
| Pod | Smallest deployable unit (1+ containers) |
| Deployment | Declarative management of pods (replicas, updates) |
| Service | Stable network endpoint for a set of pods |
| Namespace | Logical isolation within a cluster |
| ConfigMap/Secret | Configuration and sensitive data |
| PersistentVolume | Persistent storage for pods |
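The idea of controllers that "maintain the desired state" can be sketched as a compare-and-correct loop. This toy version works on a list of pod names instead of talking to the API server, and the naming scheme is hypothetical.

```python
import itertools

# Counter used to give each new pod a distinct, hypothetical name.
_ids = itertools.count(1)


def reconcile(desired_replicas, pods):
    """One pass of a toy control loop: make the pod list match the desired count.

    `pods` is a list of pod names. Missing pods are created and surplus pods
    are removed. A real controller acts through the API server and reacts to
    change notifications; this sketch only shows the compare-and-correct idea.
    """
    pods = list(pods)
    while len(pods) < desired_replicas:
        pods.append(f"nginx-{next(_ids)}")
    while len(pods) > desired_replicas:
        pods.pop()
    return pods


pods = reconcile(3, [])      # initial rollout: three pods are created
pods.remove(pods[0])         # simulate a pod crash
pods = reconcile(3, pods)    # the loop notices the gap and adds a pod
print(pods)
```

The caller never says "start a pod"; it only states how many should exist. That is the declarative model behind a Deployment's `replicas` field.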

Example YAML deployment used in the lab:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: nginx:latest
              ports:
                - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
    spec:
      selector:
        app: nginx
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80
      type: LoadBalancer
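The Service in the manifests above finds its pods purely through labels: its `selector` must match the labels set in the Deployment's pod template. The sketch below reproduces that equality-based matching rule on plain Python dictionaries; the helper name `selects` is my own.

```python
def selects(selector, pod_labels):
    """True when every key/value pair of the selector is present in the pod labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


# Labels and selectors copied from the manifests above, written as Python dicts.
deployment_pod_labels = {"app": "nginx"}  # spec.template.metadata.labels
service_selector = {"app": "nginx"}       # Service spec.selector

print(selects(service_selector, deployment_pod_labels))            # matching labels
print(selects(service_selector, {"app": "nginx", "tier": "web"}))  # extra labels are fine
print(selects(service_selector, {"app": "apache"}))                # different value
```

A typo in either label is a common reason for a Service that exists but routes no traffic, so this is a useful thing to check first when `kubectl get services` looks fine but requests fail.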

Kubernetes commands used in the lab:

    # Deploy an application
    kubectl apply -f deployment.yaml

    # List the running pods
    kubectl get pods

    # List the services
    kubectl get services

    # Scale a deployment
    kubectl scale deployment nginx-deployment --replicas=5

    # Show the logs of a pod
    kubectl logs <pod-name>

    # Show the detailed description of a pod
    kubectl describe pod <pod-name>

PART D - Analysis and Reflection

Skills acquired

Virtualization and containerization:

Solid understanding of the fundamental differences between VMs and containers, of Type 1 and Type 2 hypervisors, and of the Docker and VirtualBox tools. Ability to choose the right technology for a given use case.

Cloud Computing:

Understanding of the service models (IaaS, PaaS, SaaS) and deployment models (public, private, hybrid). Hands-on experience with OpenStack for deploying a cloud infrastructure.

Edge Computing:

Understanding of the edge paradigm and its variants (Fog Computing, MEC), of the cloud-edge continuum, and of the orchestration challenges in a distributed environment.

Orchestration:

Initial competence with Kubernetes for deploying and managing containerized services. Understanding of the cluster architecture and of YAML configuration files.

Key takeaways

1. Virtualization is the foundation of the cloud:

Without virtualization (VMs or containers), cloud computing would not exist. Understanding these mechanisms is fundamental for any cloud engineer.

2. Containers and VMs are complementary:

Containers do not replace VMs. Each technology has its own use cases. In production, containers are often deployed on VMs to combine the advantages of both.

3. Edge computing meets real needs:

IoT, 5G and real-time applications make edge computing indispensable. The cloud alone is no longer enough for every workload.

4. Orchestration is essential at scale:

Kubernetes has become the de facto standard for managing hundreds of containers. Mastering this tool is a highly sought-after skill.

5. The cloud-edge continuum is the future:

The trend is towards transparent integration of all layers (edge, fog, cloud) under unified orchestration.

Feedback

I developed new skills in hybrid architectures and their applications in modern computing environments, and I was able to understand and use all of the concepts covered. However, I realized that I will need to keep practicing with these tools so as not to forget them, because I currently have no opportunity to use them at the company where I am doing my apprenticeship.

Because the Kubernetes session was completed quickly with my lab partner, we had to move fast and simply run the commands. Thanks to the report we wrote, it was easier to understand the concepts afterwards.

My opinion

This course was very interesting. I had the chance to learn many concepts that I regularly hear mentioned in connection with certain professions. It will be genuinely useful for my career, where these concepts could be applied.

The course content was well structured, starting from the basics of virtualization and progressing gradually towards more complex topics. This progression helped me first to understand and then to extend my knowledge to more advanced concepts.

I now feel more confident about designing, deploying and managing scalable cloud-edge solutions.

Professional applications:

- DevOps engineer: deploying containerized applications on Kubernetes, CI/CD

- Cloud architect: designing hybrid and multi-cloud infrastructures

- IoT engineer: deploying edge architectures for local data processing

- System administrator: managing virtualized and OpenStack infrastructures

- Telecom engineer: integrating MEC into 5G networks

Links with other courses:

- Emerging Network Technologies: SDN for network virtualization in the cloud

- Middleware for IoT: IoT protocols deployed on edge/cloud

- Embedded IA for IoT: AI inference on edge devices

- Service Oriented Architecture: microservices deployed in the cloud

Course Documents

Full Cloud & Edge Computing Course

Complete course on Cloud & Edge Computing: virtualization, service models, OpenStack, Edge Computing, Fog Computing, MEC, cloud-edge continuum.

Reports and Projects

Cloud Computing Project Report


Course taken in 2024-2025 at INSA Toulouse, Department of Electrical and Computer Engineering (Genie Electrique et Informatique), ISS specialization.

Related courses:

- Emerging Network Technologies - S9 - SDN and network architectures

- Middleware for IoT - S9 - IoT protocols

- Embedded IA for IoT - S9 - AI on Edge

- Service Oriented Architecture - S9 - Cloud service architectures

Cloud & Edge Computing - Semester 9

Academic year: 2024-2025

Instructor: Sami Yangui

Category: Cloud, Virtualization, Edge Computing

PART A - General Presentation

Overview

The Cloud & Edge Computing course covers centralized cloud systems and decentralized edge solutions, preparing students to address modern technological challenges. Taught by Sami Yangui, this course explores virtualization technologies, cloud services and edge infrastructures. It focuses on designing, deploying and managing architectures that combine the low-latency advantages of edge computing with the scalability of cloud environments.

This training is particularly relevant today, as the rise of IoT, real-time applications and modern networks calls for expertise in these areas.

Learning objectives:

- Understand the fundamentals of virtualization (Type 1 and Type 2 hypervisors, paravirtualization)

- Master containerization with Docker and orchestration with Kubernetes

- Understand cloud service models (IaaS, PaaS, SaaS)

- Deploy and configure OpenStack environments

- Understand the Edge Computing, Fog Computing and MEC (Multi-access Edge Computing) paradigms

- Design integrated cloud-edge architectures

Position in the curriculum

This module builds on previously acquired foundations:

- Network Interconnection (S8): TCP/IP fundamentals, routing, VLAN

- Operating Systems (S5): process management, Unix systems

- Hardware Architecture (S6): physical layer, server hardware

It connects directly to other courses in the semester:

- Emerging Network Technologies (S9): SDN and network virtualization

- Middleware for IoT (S9): IoT communication protocols deployed on cloud/edge

- Service Oriented Architecture (S9): cloud service architectures

PART B - Experience and Context

Organization and resources

The module combined theory and intensive hands-on practice:

Lectures:

- Introduction to Cloud Computing and its essential characteristics

- Virtualization technologies (hypervisors, containers)

- Cloud service models (IaaS, PaaS, SaaS) and deployment models

- OpenStack architecture and its components

- Edge Computing, Fog Computing and MEC

- Cloud-Edge continuum and orchestration

Lab sessions:

- Lab 1: Configuration of virtual networks with VirtualBox, inter-VM routing

- Lab 2: Creation and management of Docker containers, Dockerfiles, volumes

- Lab 3: Deployment of an OpenStack infrastructure (Nova, Neutron, Glance, Keystone)

- Lab 4: Container orchestration with Kubernetes, service deployment

Tools used:

- VirtualBox: Type 2 virtualization for lab sessions

- Docker: application containerization

- Kubernetes: container orchestration

- OpenStack: open-source cloud platform (Nova, Neutron, Glance, Keystone, Horizon)

- Linux (Ubuntu): host system for virtualized environments

My role

As part of this course, I was responsible for:

- Learning and practicing virtualization techniques (VMs and containers)

- Designing, deploying and managing hybrid architectures combining cloud and edge computing

- Acquiring skills with tools such as Kubernetes, Docker and VirtualBox

- Writing a comprehensive technical report on the lab work completed

Challenges encountered

VirtualBox network configuration:

Setting up virtual networks (NAT, bridge, host-only) and understanding their interactions required time and methodology.

OpenStack deployment:

OpenStack is a complex platform with many interdependent components. Initial configuration and troubleshooting of connectivity issues between services were instructive challenges.

Kubernetes under time constraints:

The Kubernetes session was completed quickly with my partner. We had to execute commands without always having time to understand each step in depth. The report written afterwards helped us better absorb the concepts.

PART C - Detailed Technical Aspects

1. Virtualization fundamentals

Without virtualization:

In an environment without virtualization, a single application runs directly on the host operating system which manages the physical hardware. This approach has limitations in terms of isolation, scalability and resource utilization.

Figure: Architecture without virtualization - a single application on the host OS

With virtualization:

Virtualization allows running multiple operating systems and applications on the same physical hardware through a hypervisor. Each virtual machine (VM) has its own virtualized resources (CPU, RAM, storage, network).

Figure: Architecture with virtualization - multiple VMs on a single physical host via a hypervisor

Advantages of virtualization:

- Server consolidation: reduce the number of physical machines

- Isolation: each VM is independent (failure of one VM does not affect the others)

- Flexibility: rapid deployment of new environments

- Snapshots and migration: state backup and live migration

- Resource optimization: better hardware utilization

2. Type 1 and Type 2 Hypervisors

The hypervisor is the software component that enables virtualization. There are two main types:

Type 1 Hypervisor (Bare Metal):

The hypervisor runs directly on the physical hardware, without an intermediate host operating system. Direct access to the hardware gives it better performance, and the absence of a general-purpose host OS reduces the attack surface.

Examples: VMware ESXi, Microsoft Hyper-V, Citrix XenServer, KVM

Characteristics:

- Near-native performance

- Direct management of hardware resources

- Used in production in datacenters

- Enhanced security (reduced attack surface)

Type 2 Hypervisor (Hosted):

The hypervisor runs as an application on an existing host operating system. It is simpler to install and use but offers slightly lower performance.

Examples: Oracle VirtualBox, VMware Workstation, Parallels Desktop

Characteristics:

- Easy to install on a workstation

- Ideal for development and testing

- Reduced performance (intermediate OS layer)

- Primarily used in desktop environments

Figure: Type 1 Hypervisor (Bare Metal)

Figure: Type 2 Hypervisor (Hosted)

| Criterion | Type 1 (Bare Metal) | Type 2 (Hosted) |

|---|---|---|

| Installation | Directly on hardware | On an existing OS |

| Performance | High | Moderate |

| Primary use | Datacenters, production | Development, testing |

| Security | Strong (reduced attack surface) | Dependent on host OS |

| Examples | ESXi, KVM, Hyper-V | VirtualBox, VMware Workstation |

3. Paravirtualization

Paravirtualization is a technique where the guest operating system is modified to communicate directly with the hypervisor through "hypercalls", rather than relying on the hypervisor to fully emulate the hardware. This improves performance compared to full virtualization, because privileged operations become explicit calls to the hypervisor instead of being trapped and emulated.

Figure: Paravirtualization solutions - OpenNebula, OpenStack, Proxmox

Advantages of paravirtualization:

- Improved performance compared to full virtualization

- Better I/O management (input/output)

- Reduced overhead

Disadvantages:

- Requires modification of the guest operating system

- Compatibility limited to modified OSes

4. Containers vs Virtual Machines

Containers represent a major evolution compared to traditional virtual machines. Unlike VMs which virtualize the complete hardware, containers share the host operating system kernel and only include the libraries and dependencies necessary for the application.

Figure: Container ecosystem - Container Linux, Solaris, Docker

| Criterion | Virtual Machine | Container |

|---|---|---|

| Isolation | Complete (separate OS) | Process-level |

| Size | GB (complete OS) | MB (libraries only) |

| Startup | Minutes | Seconds |

| Performance | Overhead (hypervisor) | Near-native |

| Portability | Limited | Excellent |

| Density | ~10-20 VMs per server | ~100+ containers per server |

| Security | Strong (hardware isolation) | Moderate (shared kernel) |

VM use cases:

- Strong isolation needed (multi-tenant, security)

- Different OSes on the same host

- Legacy applications

Container use cases:

- Microservices

- CI/CD (continuous integration and deployment)

- Cloud-native applications

- Reproducible development environments

5. Docker and containerization

Docker is one of the most widely used containerization platforms. It allows creating, deploying and managing containers efficiently. During the lab sessions, I learned to use Docker to package and run applications in isolated environments.

Figure: Docker Logo

Docker architecture:

- Docker Engine: the daemon that manages containers

- Docker Image: read-only template containing the application and its dependencies

- Docker Container: running instance of an image

- Dockerfile: configuration file for building an image

- Docker Hub: public Docker image registry

- Docker Compose: tool for defining multi-container applications

Main commands used in lab sessions:

```bash
# Download an image from Docker Hub
docker pull ubuntu:20.04

# Launch a container in interactive mode
docker run -it --name mon_conteneur ubuntu:20.04 /bin/bash

# List active containers
docker ps

# List all containers (active and stopped)
docker ps -a

# Build an image from a Dockerfile
docker build -t mon_image .

# Expose a port and launch a container in the background
docker run -d -p 8080:80 --name serveur_web nginx

# Manage volumes for persistence
docker run -v /host/data:/container/data mon_image
```

Dockerfile example:

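```dockerfile
FROM ubuntu:20.04

# The Ubuntu package that provides pip is named python3-pip
RUN apt-get update && apt-get install -y python3 python3-pip

COPY app.py /app/
WORKDIR /app

# Documents the port the application listens on
EXPOSE 5000

CMD ["python3", "app.py"]
```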

Advantages of Docker:

- Portability ("Build once, run anywhere")

- Environment reproducibility

- Application isolation

- Fast startup (seconds)

- Rich ecosystem (Docker Hub, Docker Compose)

6. Cloud service models: IaaS, PaaS, SaaS

Cloud Computing offers different levels of abstraction for services, represented by three main models:

Figure: Cloud service models - IaaS, SaaS, PaaS and their abstraction levels

IaaS (Infrastructure as a Service):

Provides virtualized infrastructure resources (servers, storage, network). The user manages the OS, middleware and applications.

- Examples: AWS EC2, Google Compute Engine, Azure Virtual Machines, OpenStack

- User responsibility: OS, runtime, applications, data

- Provider responsibility: hardware, virtualization, network

- Flexibility: maximum (full control over infrastructure)

PaaS (Platform as a Service):

Provides a development and deployment platform. The user focuses on application code without managing the underlying infrastructure.

- Examples: Google App Engine, Heroku, Azure App Service, Cloud Foundry

- User responsibility: applications and data

- Provider responsibility: OS, runtime, middleware, infrastructure

- Flexibility: moderate (platform constraints)

SaaS (Software as a Service):

Software applications accessible via the browser, fully managed by the provider.

- Examples: Google Workspace, Microsoft 365, Salesforce, Dropbox

- User responsibility: data and settings

- Provider responsibility: everything else

- Flexibility: limited (configuration only)

| Aspect | IaaS | PaaS | SaaS |

|---|---|---|---|

| Control | Full | Partial | Minimal |

| Complexity | High | Medium | Low |

| Scalability | Manual/Semi-auto | Automatic | Automatic |

| Cost | Pay-per-use | Pay-per-use | Subscription |

| Target audience | System admins | Developers | End users |
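The split of responsibilities described for the three models can be written down as a small lookup. This is only an illustrative sketch: the layer names and their ordering are an assumption derived from the lists above, and `managed_by` is a hypothetical helper, not part of any cloud provider's API.

```python
from enum import Enum


class Model(Enum):
    IAAS = "IaaS"
    PAAS = "PaaS"
    SAAS = "SaaS"


# Stack layers from bottom to top (assumed ordering, based on the lists above).
LAYERS = ["hardware", "virtualization", "network", "os",
          "middleware", "runtime", "application", "data"]

# Index of the lowest layer that the user manages under each model.
_FIRST_USER_LAYER = {
    Model.IAAS: LAYERS.index("os"),
    Model.PAAS: LAYERS.index("application"),
    Model.SAAS: LAYERS.index("data"),
}


def managed_by(model: Model, layer: str) -> str:
    """Return 'user' or 'provider' for a given layer under a service model."""
    if layer not in LAYERS:
        raise ValueError(f"unknown layer: {layer}")
    if LAYERS.index(layer) >= _FIRST_USER_LAYER[model]:
        return "user"
    return "provider"


for model in Model:
    row = {layer: managed_by(model, layer) for layer in LAYERS}
    print(model.value, row)
```

Reading the printed rows from IaaS to SaaS shows the boundary between user and provider moving up the stack, which is the trade-off between control and operational burden summarized in the table.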

Essential Cloud characteristics (NIST):

Figure: Essential characteristics of Cloud Computing according to NIST

The five essential characteristics defined by NIST:

- On-demand self-service: automatic provisioning without human intervention

- Broad network access: access via standard mechanisms (HTTP, API)

- Resource pooling: pooled resources for multiple clients (multi-tenant)

- Rapid elasticity: automatic scalability according to demand

- Measured service: resource usage is metered, which enables usage-based billing (pay-per-use)

Cloud deployment models:

- Public Cloud: shared infrastructure, accessible to all (AWS, Azure, GCP)

- Private Cloud: infrastructure dedicated to an organization (OpenStack on-premise)

- Hybrid Cloud: combination of public and private cloud

- Community Cloud: shared among organizations with similar needs

7. OpenStack - Open-Source Cloud Platform

OpenStack is an open-source cloud computing platform, primarily deployed as IaaS. It manages large pools of compute, storage and network resources, either through a dashboard (Horizon) or through the OpenStack API.

Figure: OpenStack architecture and its main components

Main OpenStack components:

| Component | Project name | Function |

|---|---|---|

| Compute | Nova | Virtual machine instance management |

| Networking | Neutron | Virtual networks, subnets, routers, firewalls |

| Image | Glance | VM image storage and management |

| Identity | Keystone | Authentication, authorization, service catalog |

| Dashboard | Horizon | Web administration interface |

| Block Storage | Cinder | Persistent storage volumes |

| Object Storage | Swift | Distributed object storage |

| Orchestration | Heat | Infrastructure templates (Infrastructure as Code) |

OpenStack architecture:

The OpenStack architecture follows a distributed services model communicating via REST APIs. Keystone provides centralized authentication. Nova manages the VM lifecycle relying on Glance for images, Neutron for networking and Cinder for storage.
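To make the role of Keystone more concrete, the sketch below builds the JSON body that a client would send to the Identity API v3 endpoint `POST /v3/auth/tokens` when authenticating with a password. Keystone returns the token in the `X-Subject-Token` response header, and the client then passes it to the other services in the `X-Auth-Token` header. The user name, password and project name are hypothetical placeholders, and the sketch only constructs the request; it does not contact a server.

```python
import json


def build_token_request(username: str, password: str, project: str,
                        domain: str = "Default") -> dict:
    """Build the body of a project-scoped password authentication request."""
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        "domain": {"name": domain},
                        "password": password,
                    }
                },
            },
            # The scope selects the project the token is valid for.
            "scope": {
                "project": {"name": project, "domain": {"name": domain}}
            },
        }
    }


# Placeholder credentials, for illustration only.
body = build_token_request("demo", "secret", "demo-project")
print(json.dumps(body, indent=2))
```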

Lab work performed:

- Creation of virtual networks and subnets with Neutron

- Deployment of VM instances with Nova

- Configuration of security rules (security groups)

- Use of the Horizon dashboard for visual administration

- Image management with Glance

8. Network configuration with VirtualBox

The lab sessions allowed me to configure virtual networks with VirtualBox. I learned to create and configure virtual machines, test their connectivity, and set up port forwarding rules to enable communication between VMs and the host.

Figure: Network configuration with VirtualBox

VirtualBox network types configured:

| Network mode | Internet access | Inter-VM communication | Access from host |

|---|---|---|---|

| NAT | Yes | No | Via port forwarding |

| Bridged | Yes | Yes | Yes |

| Host-Only | No | Yes | Yes |

| Internal | No | Yes (same network) | No |
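The table can also be read as a decision aid. The following sketch encodes it as data and lists the modes that offer at least the requested capabilities. `candidate_modes` is a hypothetical helper written for this illustration, and it treats NAT port forwarding as a valid form of access from the host.

```python
from typing import List, NamedTuple


class NetworkMode(NamedTuple):
    name: str
    internet: bool      # the VM can reach the Internet
    inter_vm: bool      # VMs can reach each other
    host_access: str    # "yes", "no" or "port-forwarding"


# Values copied from the table above.
MODES = [
    NetworkMode("NAT", True, False, "port-forwarding"),
    NetworkMode("Bridged", True, True, "yes"),
    NetworkMode("Host-Only", False, True, "yes"),
    NetworkMode("Internal", False, True, "no"),
]


def candidate_modes(internet: bool, inter_vm: bool,
                    host_access: bool) -> List[str]:
    """Return the modes that provide at least the requested capabilities."""
    result = []
    for mode in MODES:
        if internet and not mode.internet:
            continue
        if inter_vm and not mode.inter_vm:
            continue
        if host_access and mode.host_access == "no":
            continue
        result.append(mode.name)
    return result


print(candidate_modes(internet=True, inter_vm=True, host_access=True))
```

The helper only checks minimum requirements. If isolation matters (for example, a lab network that must not reach the Internet), the candidates still need to be filtered by hand.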

Lab work performed:

- Configuration of private networks and routers for communication between different VMs

- Setting up port forwarding rules for SSH access

- Connectivity testing (ping, traceroute) between VMs

- Configuration of isolated subnets

9. Edge Computing paradigm

Edge Computing is a paradigm that consists of processing data as close as possible to its source of generation, rather than systematically sending it to a centralized cloud datacenter. This concept is fundamental for applications requiring low latency, reduced bandwidth or data sovereignty.

Edge Computing motivations:

- Latency: real-time applications (autonomous vehicles, augmented reality) cannot tolerate round-trip delays to the cloud

- Bandwidth: the volumes of data generated by IoT saturate network links

- Data sovereignty: some regulations require local data processing

- Reliability: operation must continue even in case of loss of cloud connection

Key characteristics:

- Local data processing as close as possible to users/sensors

- Latency reduction (on the order of ~100 ms for a round trip to the cloud, down to <10 ms at the edge)

- Data filtering and aggregation before sending to the cloud

- Disconnected mode operation possible
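As an illustration of filtering and aggregation at the edge, the sketch below reduces a window of raw sensor readings to a compact summary, which is what would be forwarded to the cloud instead of every sample. The function name, the summary fields and the alert threshold are assumptions made for this example.

```python
from statistics import mean
from typing import Dict, Iterable


def aggregate(readings: Iterable[float], threshold: float) -> Dict[str, object]:
    """Summarize a window of readings and keep only out-of-range values."""
    values = list(readings)
    if not values:
        raise ValueError("at least one reading is required")
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": mean(values),
        # Only readings above the threshold are forwarded individually.
        "alerts": [v for v in values if v > threshold],
    }


# Hypothetical temperature window collected by an edge gateway.
summary = aggregate([20.0, 21.0, 22.0, 35.0], threshold=30.0)
print(summary)
```

Here four samples become one summary record plus one alert, which reduces the upstream bandwidth while the local node can still react to the out-of-range value immediately.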

10. Fog Computing

Fog Computing, introduced by Cisco, is an extension of cloud computing that brings compute, storage and network services closer to edge devices. It sits as an intermediate layer between IoT devices (edge) and the centralized cloud.

Fog vs Edge differences:

| Aspect | Edge Computing | Fog Computing |

|---|---|---|

| Location | On the device or very close | Between edge and cloud |

| Compute capacity | Limited | Moderate |

| Latency | Very low | Low |

| Examples | Sensors, IoT gateways | Local servers, smart routers |

| Scope | Immediate processing | Aggregation, pre-processing |

Fog Computing architecture:

- Edge layer: sensors and actuators (raw data collection)

- Fog layer: gateways, local servers (pre-processing, filtering, quick decisions)

- Cloud layer: datacenters (in-depth analysis, long-term storage, machine learning)

11. MEC - Multi-access Edge Computing

MEC (Multi-access Edge Computing), standardized by ETSI, is a concept that integrates computing capabilities at the level of telecommunications operators' infrastructure, typically in base stations or network access points.

MEC characteristics:

- Integration with operators' network infrastructure (4G/5G)

- Standardized APIs for accessing network information (location, QoS)

- Hosting of third-party applications as close as possible to the access network

- Low latency guaranteed by proximity to antennas

MEC use cases:

- Connected vehicles (V2X): ultra-reliable and low-latency communication

- Augmented/virtual reality: real-time rendering

- Cloud gaming: game streaming with minimal latency

- Video analytics: real-time video stream analysis

- Industrial IoT: industrial process control

12. Cloud-Edge continuum

The cloud-edge continuum represents a unified vision where computing resources are continuously distributed from edge devices to the centralized cloud. The goal is to provide seamless orchestration that automatically places processing where it is most relevant.

Continuum principles:

- Dynamic placement: applications migrate between edge, fog and cloud according to needs (latency, load, cost)

- Unified orchestration: a single control plane manages all resources

- Heterogeneity: integration of diverse resources (x86, ARM, GPU, FPGA)

- Elasticity: horizontal and vertical scalability at all levels
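The dynamic placement principle can be sketched as a constrained choice: keep the tiers that satisfy the latency and capacity requirements, then pick the cheapest one. The latency, capacity and cost figures below are illustrative assumptions, not measurements, and `place` is a hypothetical function.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    latency_ms: float   # assumed typical round-trip latency
    cpu_cores: int      # assumed available capacity
    cost: float         # assumed relative cost per hour


TIERS = [
    Tier("edge", latency_ms=5, cpu_cores=4, cost=3.0),
    Tier("fog", latency_ms=20, cpu_cores=16, cost=2.0),
    Tier("cloud", latency_ms=100, cpu_cores=256, cost=1.0),
]


def place(max_latency_ms: float, cpu_needed: int) -> str:
    """Return the cheapest tier that meets latency and capacity constraints."""
    feasible = [
        t for t in TIERS
        if t.latency_ms <= max_latency_ms and t.cpu_cores >= cpu_needed
    ]
    if not feasible:
        raise ValueError("no tier satisfies the constraints")
    return min(feasible, key=lambda t: t.cost).name


print(place(max_latency_ms=10, cpu_needed=2))    # latency-critical workload
print(place(max_latency_ms=200, cpu_needed=64))  # batch analytics workload
```

A real orchestrator would re-evaluate this decision as load and network conditions change, which is where the autonomic loop described next comes in.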

Autonomic management:

Figure: MAPE-K autonomic loop (Monitor, Analyze, Plan, Execute) for cloud-edge continuum management

The MAPE-K model (Monitor, Analyze, Plan, Execute - Knowledge) enables autonomic management of continuum resources:

- Monitor: metric collection (latency, CPU load, bandwidth)

- Analyze: anomaly and trend detection

- Plan: placement, migration, scaling decisions

- Execute: application of decisions (deployment, container migration)

- Knowledge: shared knowledge base feeding the loop
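One iteration of the loop can be sketched as four small functions sharing a knowledge object. This is a deliberately simplified illustration: the latency target, the "add one replica" policy and all names are assumptions, and the execute step only returns the decision instead of calling an orchestrator.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Knowledge:
    latency_limit_ms: float = 50.0            # assumed service-level target
    history: List[float] = field(default_factory=list)


def monitor(metrics: Dict[str, float], knowledge: Knowledge) -> float:
    """Collect the latency metric and record it in the knowledge base."""
    latency = metrics["latency_ms"]
    knowledge.history.append(latency)
    return latency


def analyze(latency: float, knowledge: Knowledge) -> bool:
    """Detect a violation of the latency target."""
    return latency > knowledge.latency_limit_ms


def plan(violation: bool, replicas: int) -> int:
    """Decide the target replica count (scale out by one on violation)."""
    return replicas + 1 if violation else replicas


def execute(target_replicas: int) -> int:
    """Apply the decision; here it is simply returned to the caller."""
    return target_replicas


def mape_k_step(metrics: Dict[str, float], replicas: int,
                knowledge: Knowledge) -> int:
    latency = monitor(metrics, knowledge)
    violation = analyze(latency, knowledge)
    return execute(plan(violation, replicas))


knowledge = Knowledge()
replicas = mape_k_step({"latency_ms": 80.0}, 2, knowledge)
print(replicas, knowledge.history)
```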

13. Container orchestration with Kubernetes

Kubernetes (K8s) is the de facto standard platform for large-scale container orchestration. It automates the deployment, scaling and management of containerized applications.

Kubernetes architecture:

Control Plane:

- kube-apiserver: entry point for all operations (REST API)

- etcd: distributed key-value database (cluster state)

- kube-scheduler: pod placement on nodes

- kube-controller-manager: controllers that maintain the desired state

Worker Nodes:

- kubelet: agent on each node that manages pods

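- kube-proxy: maintains network rules on each node so that Service traffic is routed and load-balanced to the right pods

- Container Runtime: containerd or CRI-O (Docker Engine requires the cri-dockerd adapter since Kubernetes 1.24)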

Fundamental concepts:

| Concept | Description |

|---|---|

| Pod | Minimum deployment unit (1+ containers) |

| Deployment | Declarative pod management (replicas, updates) |

| Service | Stable network exposure for a set of pods |

| Namespace | Logical isolation within a cluster |

| ConfigMap/Secret | Configuration and sensitive data |

| PersistentVolume | Persistent storage for pods |
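The idea of declarative management behind a Deployment can be illustrated with a toy reconciliation function: it compares the desired number of replicas with the pods that exist and returns the set that should exist afterwards. This is a conceptual sketch only. Real controllers work through the API server, and real pod names carry generated suffixes; the sequential names used here are a simplification.

```python
from typing import List


def reconcile(desired_replicas: int, running_pods: List[str],
              name: str) -> List[str]:
    """Return the pod list after converging toward the desired replica count."""
    pods = list(running_pods)
    index = 0
    # Scale up: create missing pods until the desired count is reached.
    while len(pods) < desired_replicas:
        candidate = f"{name}-{index}"
        if candidate not in pods:
            pods.append(candidate)
        index += 1
    # Scale down: drop surplus pods beyond the desired count.
    return pods[:desired_replicas]


print(reconcile(3, ["nginx-0"], "nginx"))
print(reconcile(1, ["nginx-0", "nginx-1"], "nginx"))
```

The user only states the target (`replicas: 3`); the controller repeats this comparison continuously, which is why a deleted pod is replaced without manual intervention.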

YAML deployment example used in lab:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: LoadBalancer
```
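In the manifest above, the Service finds its pods through the `app: nginx` label. The sketch below mimics that equality-based matching on plain dictionaries. It assumes a non-empty selector, and the pod names and the extra `tier` label are hypothetical.

```python
from typing import Dict, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """A pod matches when it carries every key/value pair of the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: Dict[str, str],
                pods: Dict[str, Dict[str, str]]) -> List[str]:
    """Return the sorted names of the pods matched by the selector."""
    return sorted(name for name, labels in pods.items()
                  if matches(selector, labels))


# Hypothetical pods and their labels.
pods = {
    "nginx-a": {"app": "nginx"},
    "nginx-b": {"app": "nginx", "tier": "web"},
    "redis-a": {"app": "redis"},
}

print(select_pods({"app": "nginx"}, pods))
```

Extra labels on a pod do not prevent a match, so the three replicas created by the Deployment all receive traffic from `nginx-service` as long as they keep the `app: nginx` label.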

Kubernetes commands used in lab:

```bash
# Deploy an application
kubectl apply -f deployment.yaml

# View running pods
kubectl get pods

# View services
kubectl get services

# Scale a deployment
kubectl scale deployment nginx-deployment --replicas=5

# View pod logs
kubectl logs
```

PART D - Analysis and Reflection

Skills acquired

Virtualization and containerization:

Mastery of the fundamental differences between VMs and containers, Type 1 and Type 2 hypervisors, and Docker and VirtualBox tools. Ability to choose the appropriate technology according to the use case.

Cloud Computing:

Understanding of service models (IaaS, PaaS, SaaS) and deployment models (public, private, hybrid). Hands-on experience with OpenStack for deploying a cloud infrastructure.

Edge Computing:

Understanding of the edge paradigm and its variants (Fog Computing, MEC). Understanding of the cloud-edge continuum and orchestration challenges in a distributed environment.

Orchestration:

Initial competency with Kubernetes for deploying and managing containerized services. Understanding of cluster architecture and YAML configuration files.

Key takeaways

1. Virtualization is the foundation of the cloud:

Without virtualization (VMs or containers), cloud computing would not exist. Understanding these mechanisms is fundamental for any cloud engineer.

2. Containers and VMs are complementary:

Containers do not replace VMs. Each technology has its use cases. In production, containers are often deployed on VMs to combine the advantages of both.

3. Edge computing addresses real needs:

IoT, 5G and real-time applications make edge computing essential. The cloud alone is no longer sufficient for all workloads.

4. Orchestration is essential at scale:

Kubernetes has become the de facto standard for managing hundreds of containers. Mastering this tool is a highly sought-after skill.

5. The cloud-edge continuum is the future:

The trend is toward seamless integration of all layers (edge, fog, cloud) with unified orchestration.

Feedback

I developed new skills in hybrid architectures and their applications in modern computing environments. I was able to understand and use all the concepts covered. However, I realized that I will need to practice these tools in the future to avoid forgetting them, as I do not currently have the opportunity to use them in my apprenticeship company.

Since the Kubernetes session was completed quickly with my partner, we had to rush through and simply run the commands. Thanks to the report we wrote, it was easier to understand the concepts afterwards.

My opinion

This course was very interesting. I had the chance to learn many concepts that I regularly hear about in professional contexts. It will be truly useful for my career, where these concepts are likely to be applied.

The course content was well structured, starting from the basics of virtualization and gradually progressing to more complex topics. This progression helped me better understand and then broaden my knowledge toward more advanced concepts.

I now feel more confident in designing, deploying and managing scalable cloud-edge solutions.

Professional applications:

- DevOps Engineer: deployment of containerized applications on Kubernetes, CI/CD

- Cloud Architect: design of hybrid and multi-cloud infrastructures

- IoT Engineer: deployment of edge architectures for local data processing

- System Administrator: management of virtualized infrastructures and OpenStack

- Telecom Engineer: MEC integration in 5G networks

Links with other courses:

- Emerging Network Technologies: SDN for network virtualization in the cloud

- Middleware for IoT: IoT protocols deployed on edge/cloud

- Embedded IA for IoT: AI inference on edge devices

- Service Oriented Architecture: microservices deployed in the cloud

Course Documents

Full Cloud & Edge Computing Course

Complete course on Cloud & Edge Computing: virtualization, service models, OpenStack, Edge Computing, Fog Computing, MEC, cloud-edge continuum.

Reports and Projects

Cloud Computing Project Report

Open the full report

Open the lab subject

Course taken in 2024-2025 at INSA Toulouse, Department of Electrical and Computer Engineering, ISS specialization.

</div>